How does Web Scraping Job Posting Data unlock insights from leading job platforms?

Introduction

In the current competitive job market, businesses, recruiters, and researchers require vast amounts of job posting data to gain insights into hiring trends, salary benchmarks, and in-demand skills. Web Scraping Job Posting Data from various job platforms offers real-time information crucial for staying ahead in the labor market.

Job Posting Data Collection utilizes Web Scraping techniques to systematically gather structured job-related information from career websites, corporate portals, and recruitment platforms. This blog delves into effective methods, industry best practices, and the challenges of extracting job posting data efficiently.

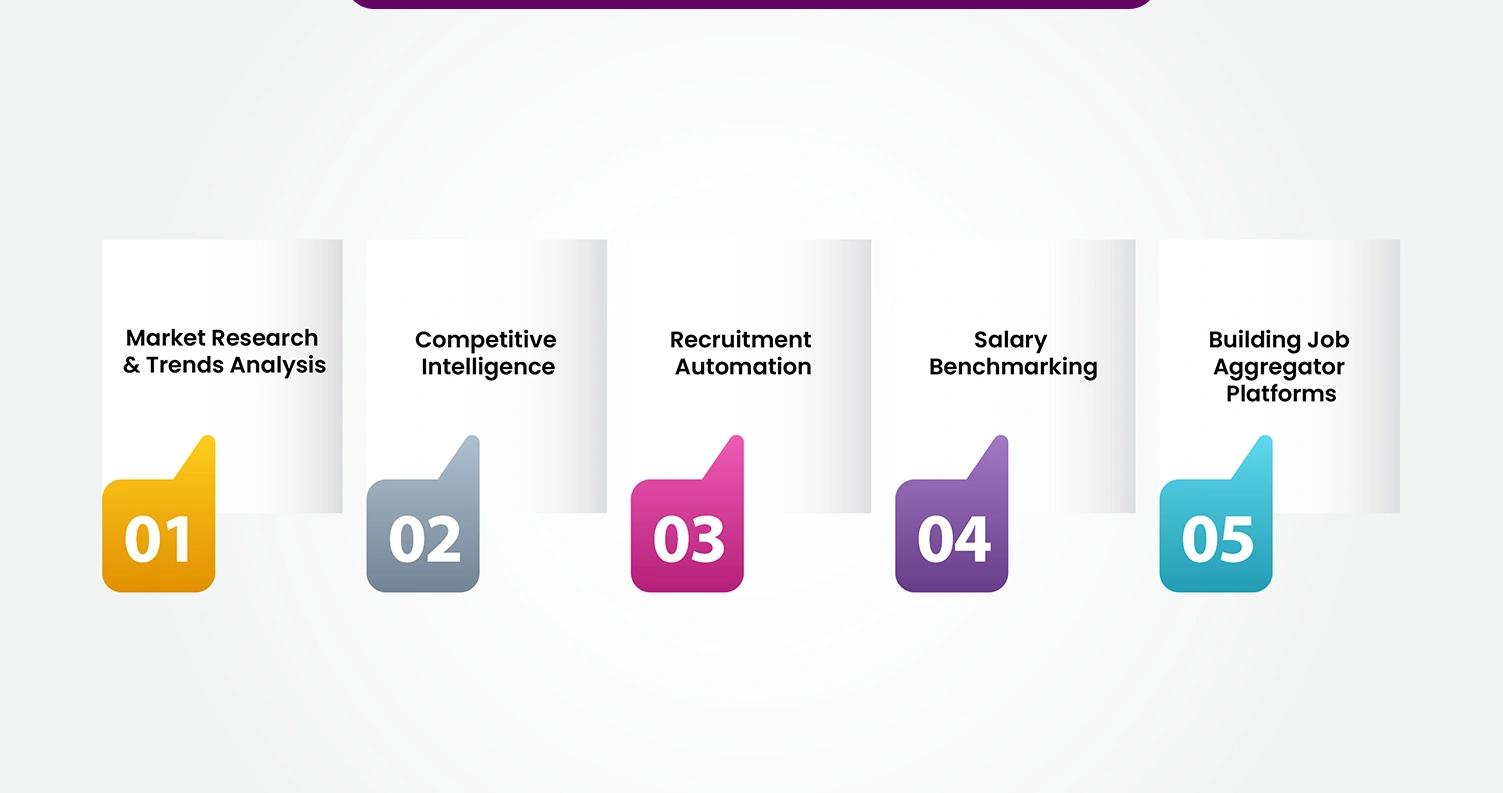

Why Extract Job Posting Data?

Extracting job posting data provides valuable insights for market research, competitive analysis, recruitment optimization, and salary benchmarking, helping businesses and job seekers stay ahead in the evolving job market.

1. Market Research & Trends Analysis

Extract Job Posting Data to identify in-demand skills and job roles, gaining valuable insights into market demands.

Scrape Job Posting Data to analyze job posting trends across various industries, enabling a better understanding of the evolving job market.
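Once descriptions have been collected, finding in-demand skills can start with simple counting. Below is a minimal sketch that counts how many postings mention each skill. The skill vocabulary and sample descriptions are hypothetical. Real projects usually need a curated taxonomy and a way to handle synonyms such as "Postgres" and "PostgreSQL".

```python
import re
from collections import Counter

# Hypothetical skill vocabulary; a real project would use a curated taxonomy.
SKILLS = ["python", "sql", "aws", "docker", "kubernetes", "react"]


def count_skills(descriptions: list[str], skills: list[str] = SKILLS) -> Counter:
    """Count how many postings mention each skill (at most once per posting)."""
    counts: Counter = Counter()
    for text in descriptions:
        # Lowercase word tokens; '+' and '#' are kept so terms like "c++" or "c#" survive.
        words = set(re.findall(r"[a-z0-9+#]+", text.lower()))
        counts.update(skill for skill in skills if skill in words)
    return counts


if __name__ == "__main__":
    sample = [
        "Senior engineer: Python, SQL and AWS required.",
        "Frontend developer with React; Docker is a plus.",
        "Data engineer (Python, Docker, Kubernetes).",
    ]
    for skill, n in count_skills(sample).most_common():
        print(f"{skill}: {n}")
```

Counting each skill once per posting measures how widely it is requested. It does not reward descriptions that simply repeat the word.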

2. Competitive Intelligence

Use a Job Posting Data Extractor to track job openings from competitors, helping businesses stay ahead.

Scrape Job Posting Data to study the hiring strategies of top companies, improving your competitive edge in talent acquisition.

3. Recruitment Automation

Collect job posting data to automate candidate-job matching and streamline your hiring processes.

Leverage Job Posting Data Collection to develop AI-driven recruitment models that optimize the hiring process and improve candidate matching.

4. Salary Benchmarking

Extract salary insights from job postings for different roles, providing valuable data for compensation analysis.

Compare pay scales across locations, allowing for a comprehensive understanding of salary trends and market expectations.
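Salary fields are usually free text, so they need to be normalized before pay can be compared across locations. The sketch below assumes US-dollar amounts written like "$90,000 - $120,000 a year" or "$45 an hour". It also assumes 2,080 working hours per year when annualizing hourly pay. Other formats and currencies would need their own rules, and a range midpoint is only a rough summary of the posted range.

```python
import re
from statistics import median
from typing import Optional

HOURS_PER_YEAR = 2080  # assumption: 40 hours x 52 weeks

# A dollar sign followed by a number such as 90,000 or 45.50
_AMOUNT = re.compile(r"\$\s*(\d[\d,]*(?:\.\d+)?)")


def annual_salary_midpoint(text: str) -> Optional[float]:
    """Return the annualized midpoint of a salary string, or None if no amount is found."""
    amounts = [float(a.replace(",", "")) for a in _AMOUNT.findall(text)][:2]
    if not amounts:
        return None
    midpoint = sum(amounts) / len(amounts)
    if "hour" in text.lower():  # matches "an hour", "per hour", "hourly"
        midpoint *= HOURS_PER_YEAR
    return midpoint


def median_by_location(postings: list[dict]) -> dict[str, float]:
    """Group postings by location and return the median annualized salary per location."""
    groups: dict[str, list[float]] = {}
    for p in postings:
        value = annual_salary_midpoint(p.get("salary") or "")
        if value is not None:
            groups.setdefault(p["location"], []).append(value)
    return {loc: median(values) for loc, values in groups.items()}
```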

5. Building Job Aggregator Platforms

Collect job listings from multiple sources through Job Posting Data Scraping, offering a wider selection of opportunities.

Display real-time job updates in one place, creating a seamless experience for job seekers and employers alike.

Together, these use cases help businesses and developers turn job posting data into actionable insights and better recruitment strategies.

Popular Job Platforms to Scrape Job Posting Data

Popular job platforms are online job boards and career networks where companies post job openings and job seekers search for opportunities. These platforms provide a vast dataset for analyzing job market trends, employer demand, and industry insights.

Key Job Platforms for Scraping Job Posting Data:

- LinkedIn Jobs : A leading professional networking platform featuring job listings from various industries.

- Indeed : A comprehensive job aggregator that compiles postings from numerous companies and career sites.

- Glassdoor : A job board that combines job listings with company reviews, salaries, and interview insights.

- Monster : A global employment platform that connects job seekers with employers across industries.

- ZipRecruiter : An AI-powered job search engine that matches candidates with relevant job postings.

- CareerBuilder : A recruitment platform offering job postings, hiring analytics, and career advice.

- Company Career Pages : Direct hiring pages from employers, providing the latest job opportunities at the source.

Methods to Extract Job Posting Data

Extracting job posting data involves using various techniques to gather job listings from multiple sources, ensuring structured and accessible information for analysis, automation, or business use.

1. Utilizing Official Job APIs

Many job platforms offer official APIs that provide legal and structured access to job posting data. These APIs allow developers to retrieve job listings with filtering options.

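Some of the most widely used job APIs are listed below. Several are open only to approved partners, and access programs change over time, so check each provider's current developer terms: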

- Indeed API : Enables access to job listings with advanced filtering options.

- LinkedIn Jobs API : Requires developer access to fetch job-related data.

- Glassdoor API : Provides insights into job postings, salaries, and company reviews.

- Google Cloud Talent Solution (Job Search API) : Provides search over job listings that you supply yourself; it does not extract listings from Google Search results.

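Example API request (illustrative only: the endpoint, parameters, and token are placeholders, so consult the provider's current documentation for the real values):

```shell
curl -X GET "https://api.indeed.com/v2/jobs/search?q=software+engineer&location=USA&limit=10" \
  -H "Authorization: Bearer YOUR_API_KEY"
```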

Where access is granted, these APIs streamline data extraction and keep collection within the platform's policies, while providing up-to-date job market data.
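The same kind of request can be built in Python using only the standard library. This is a sketch under stated assumptions. The base URL and query parameters are copied from the illustrative curl example, and the `results` key in the JSON response is a hypothetical response shape. Adapt all three to whatever the provider actually documents.

```python
import json
import urllib.parse
import urllib.request

# Placeholder endpoint taken from the illustrative curl example; not a confirmed API.
BASE_URL = "https://api.indeed.com/v2/jobs/search"


def build_search_request(api_key: str, query: str, location: str, limit: int = 10) -> urllib.request.Request:
    """Build an authenticated GET request; urlencode turns spaces into '+'."""
    params = urllib.parse.urlencode({"q": query, "location": location, "limit": limit})
    return urllib.request.Request(
        f"{BASE_URL}?{params}",
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def parse_api_jobs(payload: bytes) -> list[dict]:
    """Extract job records from a JSON body; the "results" key is an assumption."""
    data = json.loads(payload)
    return data.get("results", [])


def search_jobs(api_key: str, query: str, location: str, limit: int = 10) -> list[dict]:
    """Send the request and return parsed job records (requires network access)."""
    request = build_search_request(api_key, query, location, limit)
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_api_jobs(response.read())
```

Request building and response parsing are kept in separate functions. That way, each piece can be checked offline before any real credentials are used.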

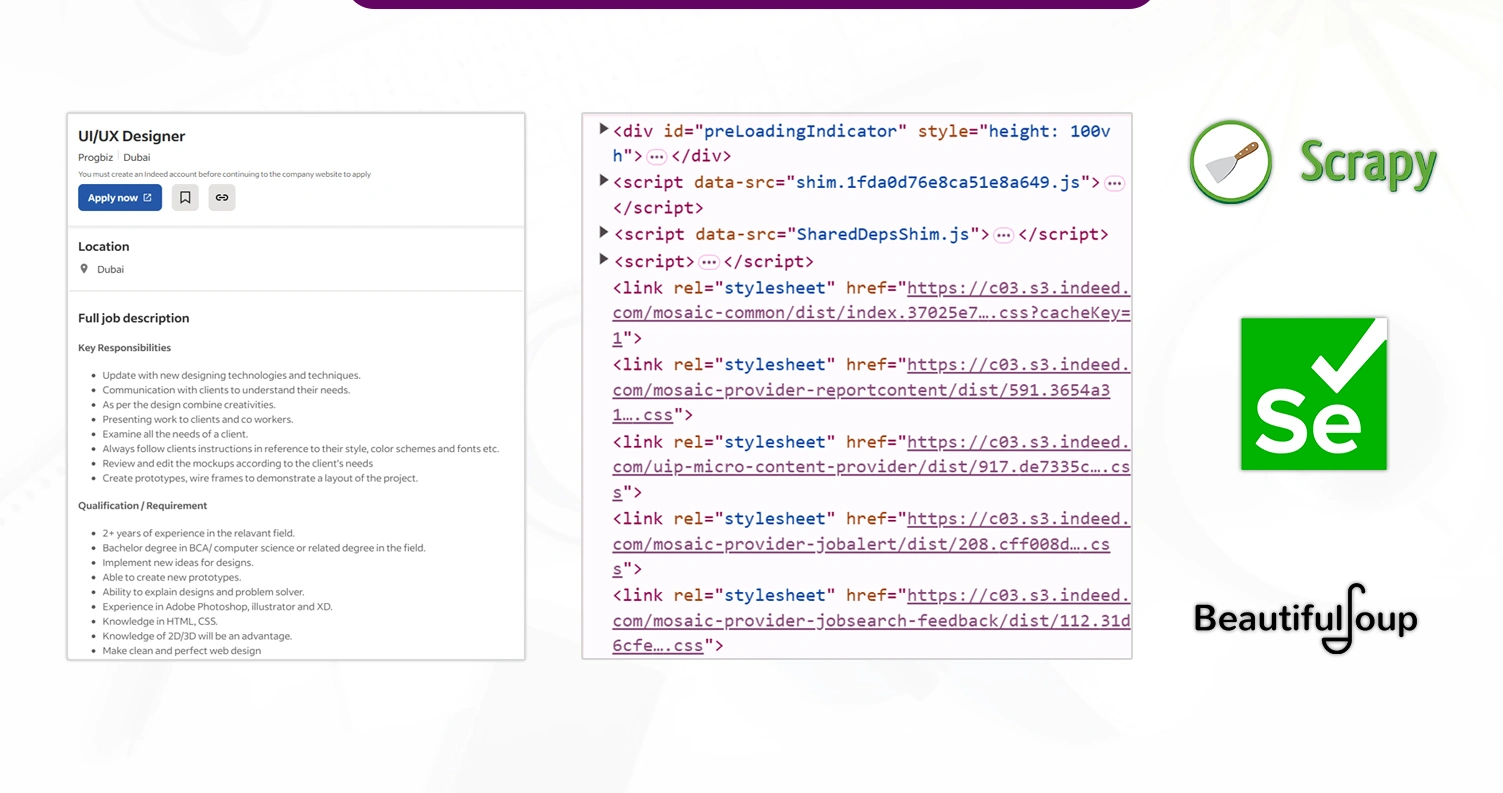

2. Web Scraping Job Posting Data

When an official API is unavailable, web scraping becomes an effective method for extracting job postings from websites.

Tools for Web Scraping:

- Python Libraries : Popular libraries such as Scrapy, Selenium, BeautifulSoup, and Requests help fetch and parse job posting data from structured and unstructured web sources.

- Automation Frameworks : Tools like Puppeteer and Playwright enable automated browsing, interaction, and extraction of dynamic job listings that require JavaScript rendering.

- Headless Browsers : Chrome Headless and Firefox Headless allow seamless data extraction without opening a visible browser window, improving efficiency and speed in large-scale job data scraping.

Each method plays a crucial role in gathering structured job posting data, helping businesses enhance their recruitment strategies and labor market analyses.

Steps to Scrape Job Posting Data

Scraping job postings involves identifying the target website, locating key page elements, handling dynamic content, and storing the results in a structured format for analysis.

1. Identify Target Website

Begin by selecting job platforms that host relevant job listings. Choose websites that align with your industry, region, or data requirements to ensure meaningful insights.

2. Inspect Page Elements

Utilize browser Developer Tools (F12) to examine the HTML structure of the webpage. Locate key elements such as job title, company name, salary, and job description to determine the most effective scraping approach.

3. Extract Job Details

Once the job listings are identified, extract key details that define each posting:

- Job Title : Capture the exact position name to categorize roles accurately.

- Company Name : Identify the employer to analyze hiring trends.

- Location : Extract job location details, including city, state, or remote options.

- Salary : Retrieve salary information, if available, for market analysis.

- Job Description : Collect job responsibilities, qualifications, and required skills.

- Date Posted : Note the listing date to track job market trends.
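Job boards often display relative dates such as "Posted 3 days ago" instead of a calendar date. Tracking trends requires converting these labels into real dates. The sketch below assumes English phrasings: "today", "just posted", "yesterday", "N days ago", "30+ days ago", or an ISO date. Any other format returns None, so it can be reviewed instead of silently guessed.

```python
import re
from datetime import date, timedelta
from typing import Optional

# Matches "3 days ago", "1 day ago", and "30+ days ago"
_DAYS_AGO = re.compile(r"(\d+)\+?\s+days?\s+ago")


def normalize_date_posted(text: str, today: date) -> Optional[date]:
    """Convert a 'date posted' label into a calendar date relative to `today`."""
    label = text.strip().lower()
    if "today" in label or "just posted" in label:
        return today
    if "yesterday" in label:
        return today - timedelta(days=1)
    match = _DAYS_AGO.search(label)
    if match:
        # "30+ days ago" is treated as exactly 30 days: an upper bound on the date, not a precise one.
        return today - timedelta(days=int(match.group(1)))
    try:
        return date.fromisoformat(label)  # already an ISO date such as "2024-05-01"
    except ValueError:
        return None
```

The caller passes `today` explicitly, usually the scrape date. This keeps results reproducible when old snapshots are reprocessed.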

4. Handling Dynamic Content

Many job portals load postings dynamically with JavaScript. As a result, a plain HTTP request may return HTML that does not yet contain the listings.

To handle this:

- Use Selenium : For websites that load job listings via JavaScript, Selenium can automate browser interactions, ensuring that all job postings are fully rendered before extraction.

- Implement Time Delays : Adding delays between requests helps mimic human behavior and reduces the risk of getting blocked by anti-bot mechanisms.
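The time-delay idea can be captured in a small helper. It enforces a random gap between consecutive requests, measured from the previous request. The 2 to 5 second range is an arbitrary assumption, since a suitable rate depends on the site and its terms. The clock and sleep functions are injectable, so the behavior can be checked without actually waiting.

```python
import random
import time
from typing import Callable, Optional


class PoliteDelay:
    """Wait a random interval between requests, measured from the previous request."""

    def __init__(
        self,
        min_seconds: float = 2.0,
        max_seconds: float = 5.0,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_seconds = min_seconds
        self.max_seconds = max_seconds
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Sleep as needed before the next request; return the seconds slept."""
        slept = 0.0
        if self._last is not None:
            target = random.uniform(self.min_seconds, self.max_seconds)
            elapsed = self._clock() - self._last
            slept = max(0.0, target - elapsed)  # time already spent parsing counts toward the gap
            if slept:
                self._sleep(slept)
        self._last = self._clock()
        return slept
```

Call `delay.wait()` immediately before each request. The first call returns at once, and each later call sleeps only for whatever part of the random gap has not already passed.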

5. Storing the Extracted Data

Once the job listings are successfully scraped, organizing and storing the data is crucial for analysis and future use.

Consider the following storage formats:

- CSV : A simple and widely compatible format for structured data storage and easy manipulation in spreadsheet tools.

- JSON : Ideal for storing hierarchical job posting data, particularly for integration with APIs or web applications.

- Databases : SQL or NoSQL databases provide efficient storage and retrieval capabilities, making them suitable for handling large volumes of job market data.
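The standard library is enough to write the same records to both CSV and JSON. In this short sketch, the field names follow the job details listed earlier, and the file paths are placeholders.

```python
import csv
import json
from pathlib import Path

FIELDS = ["title", "company", "location", "salary", "description", "date_posted"]


def save_csv(postings: list[dict], path: Path) -> None:
    """Write postings as CSV rows; missing fields become empty cells, extra keys are ignored."""
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(postings)


def save_json(postings: list[dict], path: Path) -> None:
    """Write postings as a JSON array, keeping non-ASCII text (e.g. city names) readable."""
    path.write_text(json.dumps(postings, ensure_ascii=False, indent=2), encoding="utf-8")


if __name__ == "__main__":
    jobs = [{"title": "Data Analyst", "company": "Acme", "location": "Remote"}]
    save_csv(jobs, Path("jobs.csv"))
    save_json(jobs, Path("jobs.json"))
```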

Following these steps ensures accurate and efficient job posting data extraction while adhering to ethical and legal guidelines.

Example Web Scraping Code (Python & BeautifulSoup)


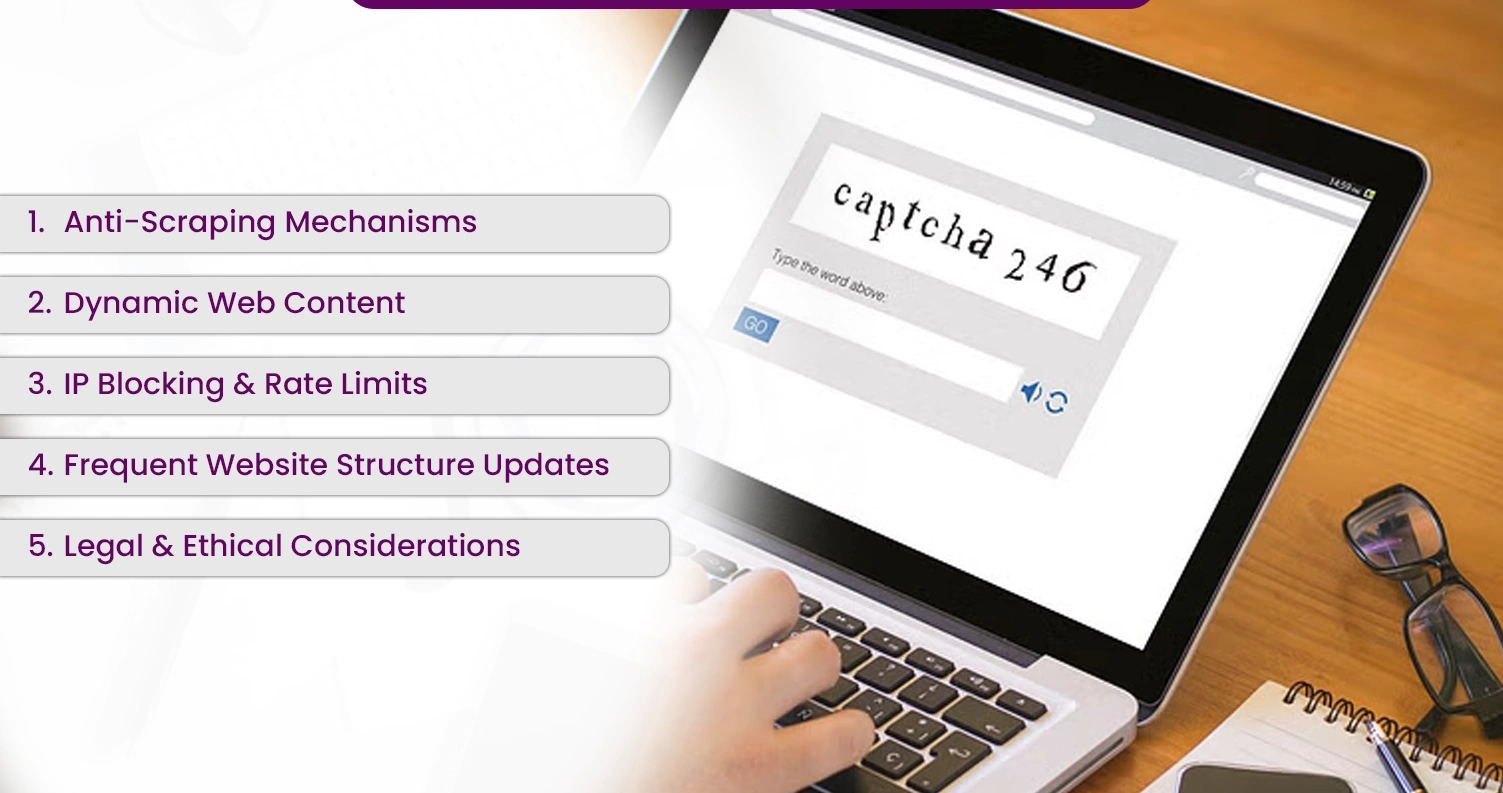
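Here is a minimal sketch of the approach for a static results page. It assumes hypothetical markup in which each listing is a `<div class="job-card">` containing `h2.job-title`, `span.company`, `span.location` and `span.salary` elements. Real sites use different class names that change often, so take the actual selectors from the page inspection step. The `job-research-bot/0.1` user-agent string is also a placeholder. Fetching uses `urllib.request`, and parsing uses BeautifulSoup (`pip install beautifulsoup4`).

```python
import urllib.request
from typing import Optional

from bs4 import BeautifulSoup

# Hypothetical selectors; replace them with the ones found in Developer Tools.
CARD_SELECTOR = "div.job-card"
FIELD_SELECTORS = {
    "title": "h2.job-title",
    "company": "span.company",
    "location": "span.location",
    "salary": "span.salary",
}


def fetch_html(url: str) -> str:
    """Download a page with an explicit User-Agent header (requires network access)."""
    request = urllib.request.Request(url, headers={"User-Agent": "job-research-bot/0.1"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


def parse_job_cards(html: str) -> list[dict[str, Optional[str]]]:
    """Return one dict per job card; fields that are absent are set to None."""
    soup = BeautifulSoup(html, "html.parser")
    jobs = []
    for card in soup.select(CARD_SELECTOR):
        job: dict[str, Optional[str]] = {}
        for field, selector in FIELD_SELECTORS.items():
            element = card.select_one(selector)
            job[field] = element.get_text(strip=True) if element else None
        jobs.append(job)
    return jobs


if __name__ == "__main__":
    sample = """
    <div class="job-card"><h2 class="job-title">Data Engineer</h2>
      <span class="company">Acme Corp</span><span class="location">Remote</span></div>
    """
    print(parse_job_cards(sample))
```

Parsing is kept separate from fetching, so the selectors can be tested against saved HTML. This is also the place to update them when a site changes its layout.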

Challenges in Scraping Job Posting Data

Extracting job postings from various websites presents multiple technical, legal, and ethical challenges that require strategic solutions for effective data collection.

1. Anti-Scraping Mechanisms

Websites deploy CAPTCHA systems and advanced bot detection techniques to prevent automated access.

Solution : Implement CAPTCHA solvers or reduce request frequency to mimic human behavior.
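Request frequency can also be reduced adaptively. Whenever the site returns a block signal, such as HTTP 429 or a CAPTCHA page, back off exponentially, and honor a numeric `Retry-After` header when one is present. The base delay, the cap, and which responses count as blocks are all assumptions in this sketch. It also handles only the seconds form of `Retry-After`, not the HTTP-date form.

```python
import random
from typing import Optional

BASE_DELAY = 5.0   # seconds before the first retry (assumption)
MAX_DELAY = 300.0  # upper bound on any single wait (assumption)


def backoff_delay(attempt: int, retry_after: Optional[str] = None) -> float:
    """Seconds to wait before retry number `attempt` (starting at 0).

    A numeric Retry-After header takes precedence. Otherwise the ceiling doubles
    with each attempt, is capped at MAX_DELAY, and the actual wait is drawn
    uniformly below it ("full jitter") so parallel workers do not retry in lockstep.
    """
    if retry_after is not None and retry_after.strip().isdigit():
        return min(float(retry_after.strip()), MAX_DELAY)
    ceiling = min(BASE_DELAY * (2 ** attempt), MAX_DELAY)
    return random.uniform(0, ceiling)
```

A scraper would pass the response's `Retry-After` header value, if any, and sleep for the returned number of seconds before retrying. After a few consecutive blocks, it should stop altogether.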

2. Dynamic Web Content

Many job boards use JavaScript-rendered pages, so the listings are often absent from the initial static HTML response.

Solution : Utilize tools like Selenium or Puppeteer to dynamically load and interact with full-page content.

3. IP Blocking & Rate Limits

Sending too many requests from a single IP address can trigger IP bans and rate limits.

Solution : Employ rotating proxies to distribute requests across multiple IP addresses, and vary user-agent strings between requests.
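Below is a sketch of rotating proxies and user-agent strings using `itertools.cycle` and `urllib.request.ProxyHandler`. The proxy addresses and user-agent strings are placeholders. In practice, they would come from a proxy provider and a maintained list of current browser strings.

```python
import itertools
import urllib.request

# Placeholder values; substitute real proxy endpoints and browser user-agent strings.
PROXIES = ["http://proxy1.example:8080", "http://proxy2.example:8080"]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]


class Rotator:
    """Hand out the next proxy and the next user agent for each request (round-robin)."""

    def __init__(self, proxies: list[str], user_agents: list[str]) -> None:
        self._proxies = itertools.cycle(proxies)
        self._agents = itertools.cycle(user_agents)

    def next_pair(self) -> tuple[str, str]:
        return next(self._proxies), next(self._agents)

    def fetch(self, url: str, timeout: float = 30) -> bytes:
        """Fetch `url` through the next proxy with the next user agent (requires network)."""
        proxy, agent = self.next_pair()
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        request = urllib.request.Request(url, headers={"User-Agent": agent})
        with opener.open(request, timeout=timeout) as response:
            return response.read()
```

Because the two lists have different lengths and cycle independently, the same proxy is not always paired with the same user agent.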

4. Frequent Website Structure Updates

Websites often modify their HTML structure, breaking scrapers and disrupting data extraction.

Solution : Use adaptable CSS/XPath selectors and implement monitoring systems to detect changes.
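A lightweight way to detect layout changes is to track, after each run, how often each expected field comes back empty. The sketch below flags fields whose missing rate exceeds a threshold. The 20% default and the field names are assumptions. Fields that are legitimately optional, such as salary, may need their own thresholds.

```python
def fields_breaking(
    jobs: list[dict], required: list[str], max_missing_rate: float = 0.2
) -> dict[str, float]:
    """Return {field: missing_rate} for fields missing more often than allowed.

    A sudden jump in the missing rate after a site update usually means a
    selector no longer matches and should be reviewed.
    """
    if not jobs:
        # No cards at all is itself a strong sign that the card selector broke.
        return {field: 1.0 for field in required}
    report: dict[str, float] = {}
    for field in required:
        missing = sum(1 for job in jobs if not job.get(field))  # None and "" both count as missing
        rate = missing / len(jobs)
        if rate > max_missing_rate:
            report[field] = rate
    return report
```

A non-empty report can trigger an alert or pause the scheduler until the selectors are updated.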

5. Legal & Ethical Considerations

Some platforms explicitly forbid web scraping or impose legal restrictions in their Terms of Service.

Solution : Always review the site's Terms of Service and its robots.txt file, and adhere to relevant legal and ethical guidelines.
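Python's standard library can evaluate robots.txt rules directly through `urllib.robotparser`. In this minimal sketch, the rules and the `job-research-bot` crawler name are hypothetical. The text is parsed locally; against a live site you would call `set_url()` and `read()` instead. Keep in mind that robots.txt covers crawling permissions only and does not replace reading the Terms of Service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """
User-agent: *
Disallow: /jobs/apply
Crawl-delay: 10
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    agent = "job-research-bot"  # hypothetical crawler name
    for url in ["https://example.com/jobs/search?q=data", "https://example.com/jobs/apply/123"]:
        print(url, "allowed" if rules.can_fetch(agent, url) else "disallowed")
    print("crawl delay:", rules.crawl_delay(agent))
```

If the site declares a crawl delay, it is a natural lower bound for any delay configured between requests.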

Best Practices for Job Posting Data Collection

Job Posting Data Collection involves systematically gathering job listings from various online sources while adhering to ethical, legal, and technical best practices to ensure data accuracy and compliance.

- Use Official APIs : Leveraging official APIs ensures structured, legal access to job postings, reducing the risk of restrictions or legal issues.

- Respect Robots.txt : Always check a website’s robots.txt file to understand its scraping permissions and adhere to ethical data collection practices.

- Rotate Proxies & Agents : To prevent IP bans and maintain uninterrupted data collection, mimic real user behavior by rotating proxies and user agents.

- Throttle Request Rate : Introduce time delays between requests to minimize the risk of detection and ensure smooth, sustainable data extraction.

- Store Data Efficiently : Optimize data management using robust storage solutions like MongoDB, PostgreSQL, or cloud-based databases for scalable and secure storage.
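As a self-contained stand-in for MongoDB or PostgreSQL, this sketch uses SQLite from the standard library. A `UNIQUE` constraint combined with `INSERT ... ON CONFLICT ... DO UPDATE` (syntax PostgreSQL also supports; SQLite needs version 3.24 or later) prevents repeated scrapes from creating duplicate rows. Using `(source, source_job_id)` as the natural key is an assumption; choose whatever identifier each source reliably provides.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS job_postings (
    source        TEXT NOT NULL,
    source_job_id TEXT NOT NULL,
    title         TEXT,
    company       TEXT,
    location      TEXT,
    salary        TEXT,
    date_posted   TEXT,
    UNIQUE (source, source_job_id)
)
"""

# Insert new rows; when the key already exists, refresh the stored fields instead.
UPSERT = """
INSERT INTO job_postings (source, source_job_id, title, company, location, salary, date_posted)
VALUES (:source, :source_job_id, :title, :company, :location, :salary, :date_posted)
ON CONFLICT (source, source_job_id) DO UPDATE SET
    title = excluded.title,
    company = excluded.company,
    location = excluded.location,
    salary = excluded.salary,
    date_posted = excluded.date_posted
"""

COLUMNS = ("source", "source_job_id", "title", "company", "location", "salary", "date_posted")


def upsert_postings(conn: sqlite3.Connection, postings: list[dict]) -> None:
    """Insert new postings and update ones already stored under the same key."""
    rows = [{col: p.get(col) for col in COLUMNS} for p in postings]  # absent fields become NULL
    with conn:  # commits on success, rolls back on error
        conn.execute(SCHEMA)
        conn.executemany(UPSERT, rows)
```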

Conclusion

Accessing job posting data from diverse platforms is essential for analyzing employment trends, understanding hiring patterns, and identifying industry demands. By leveraging advanced data extraction methods, businesses can stay ahead in the competitive job market.

Web Scraping Job Posting Data enables organizations to collect and analyze job listings at scale, providing deeper insights into recruitment strategies and workforce trends. While APIs offer structured access, web scraping remains a powerful alternative for acquiring real-time job market data.

For companies seeking a seamless solution, our Job Posting Data Scraping services deliver efficient data extraction from multiple job portals while adhering to ethical and legal compliance standards.

Contact Mobile App Scraping today to automate your job data collection process and gain actionable hiring insights!