How Can You Automate E-Courts Data Scraping with Python for Legal Research?

Introduction

Legal research requires extensive access to case details, court records, and legal precedents. With the digitization of judicial data, E-Courts Data Scraping has become a vital tool for legal professionals, researchers, and law firms to gather and analyze legal information efficiently. Automating this process with Python enhances accuracy, speed, and overall efficiency.

This blog will explore the fundamentals of E-Courts Data Scraping and best practices for E-Courts Portal Data Extraction to ensure seamless and structured data collection. We will also discuss the importance of an optimized Python environment for efficient data processing.

Why Automate E-Courts Data Scraping?

The E-Courts system is a vital resource for legal professionals, providing real-time access to case details, judgments, and hearing schedules. However, manually gathering this information is tedious and highly inefficient. This is where automation becomes essential:

- Efficiency: Automated scripts can rapidly process large volumes of legal data, significantly reducing the time required for data extraction.

- Accuracy: Minimizes human errors, ensuring precise and reliable data collection.

- Scalability: Efficiently handles multiple cases and court records simultaneously, accommodating growing data needs.

- Consistency: Applies the same extraction rules across jurisdictions, producing uniformly structured output and helping maintain data integrity.

Legal professionals can seamlessly extract structured data by automating E-Courts Website Scraping using Python, enabling more efficient legal research and case analysis.

The Digital Revolution in Legal Research

Imagine standing at the intersection of law and technology, where web scraping in a well-configured Python environment is more than a technical process: it is a gateway to legal insights that were previously out of reach. Legal professionals are using automation to transform research and strategy in a data-driven era.

Traditional legal research was time-intensive, requiring weeks or even months to track case histories, cross-reference precedents, and compile statistical data. E-Courts Portal Data Extraction has redefined this approach entirely.

Consider a legal researcher who, before adopting Automated Court Case Scraper techniques, spent weekends buried in court archives. Now, with Python scripts, the same researcher extracts comprehensive case details within hours, gaining a competitive edge that was once unimaginable.

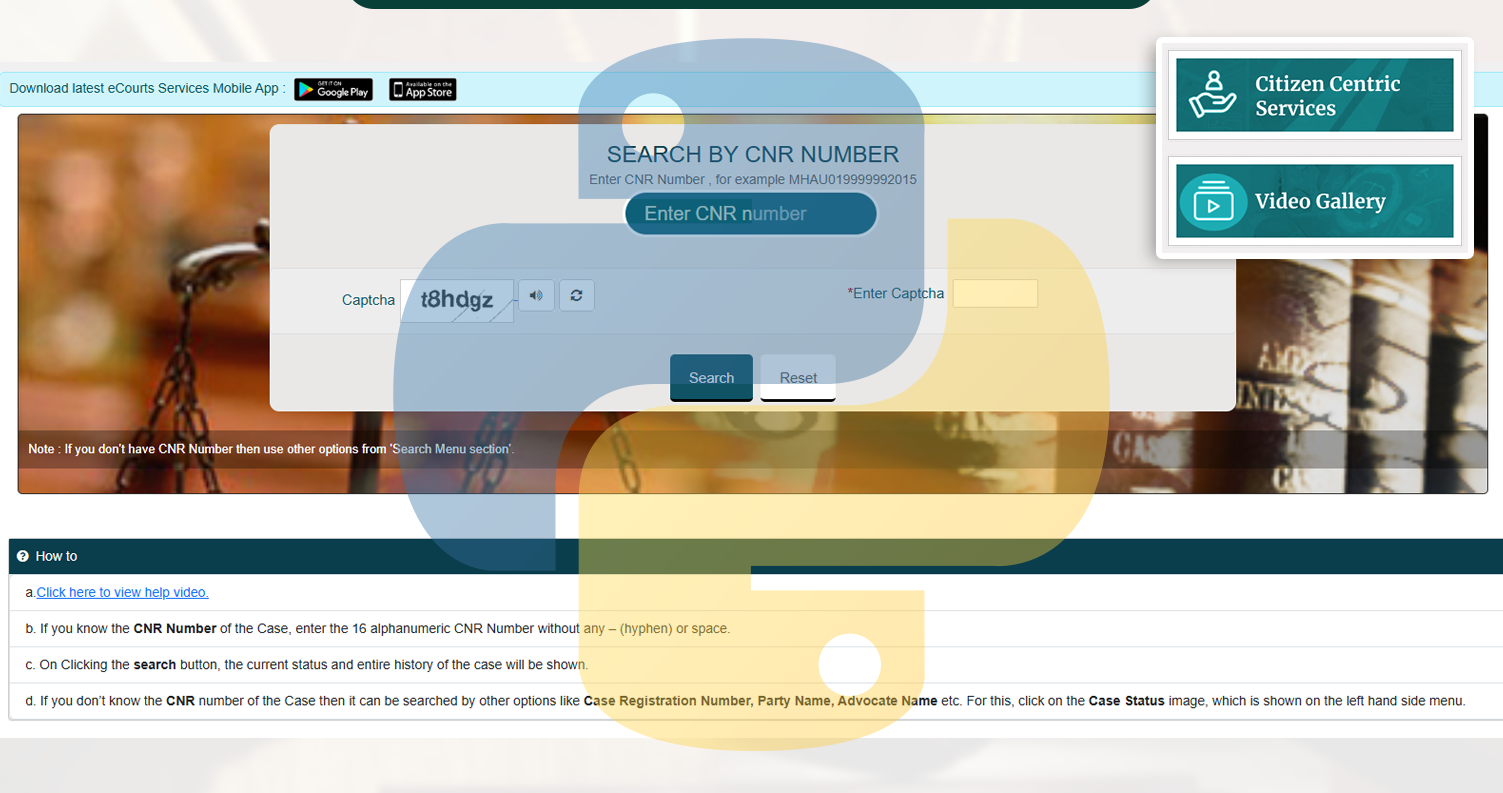

Setting Up the Python Environment for Scraping

Before starting with E-Courts API Scraping, setting up an optimized Python environment is essential to ensure efficient and error-free data extraction. Follow these steps:

Install Required Libraries

Make sure your Python environment includes the necessary web scraping libraries by installing them using the following command:

pip install requests beautifulsoup4 selenium pandas

- Requests: Sends HTTP requests and fetches HTML pages efficiently.

- BeautifulSoup: Parses and extracts structured data from HTML/XML.

- Selenium: Automates interactions with dynamic web pages.

- Pandas: Structures and organizes extracted data for easy manipulation.

Configure Web Drivers

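Selenium controls a real browser through a driver such as ChromeDriver, which lets you scrape websites whose content is rendered by JavaScript. In Selenium 4, the driver path is passed through a Service object (the older executable_path argument was deprecated and has since been removed), and from Selenium 4.6 onward Selenium Manager can locate a matching driver automatically. Configure it as shown below:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service

# Explicit driver path; with Selenium 4.6+ you can call webdriver.Chrome() with no arguments instead.
driver = webdriver.Chrome(service=Service("path/to/chromedriver"))

Properly setting up the Python environment for web scraping ensures a smoother data extraction process and minimizes errors.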

Steps to Build an Automated Court Case Scraper in Python

An Automated Court Case Scraper in Python is a script or application designed to extract legal case information from court portals systematically. This automation streamlines data collection for legal professionals, researchers, and analysts by retrieving case details efficiently and accurately.

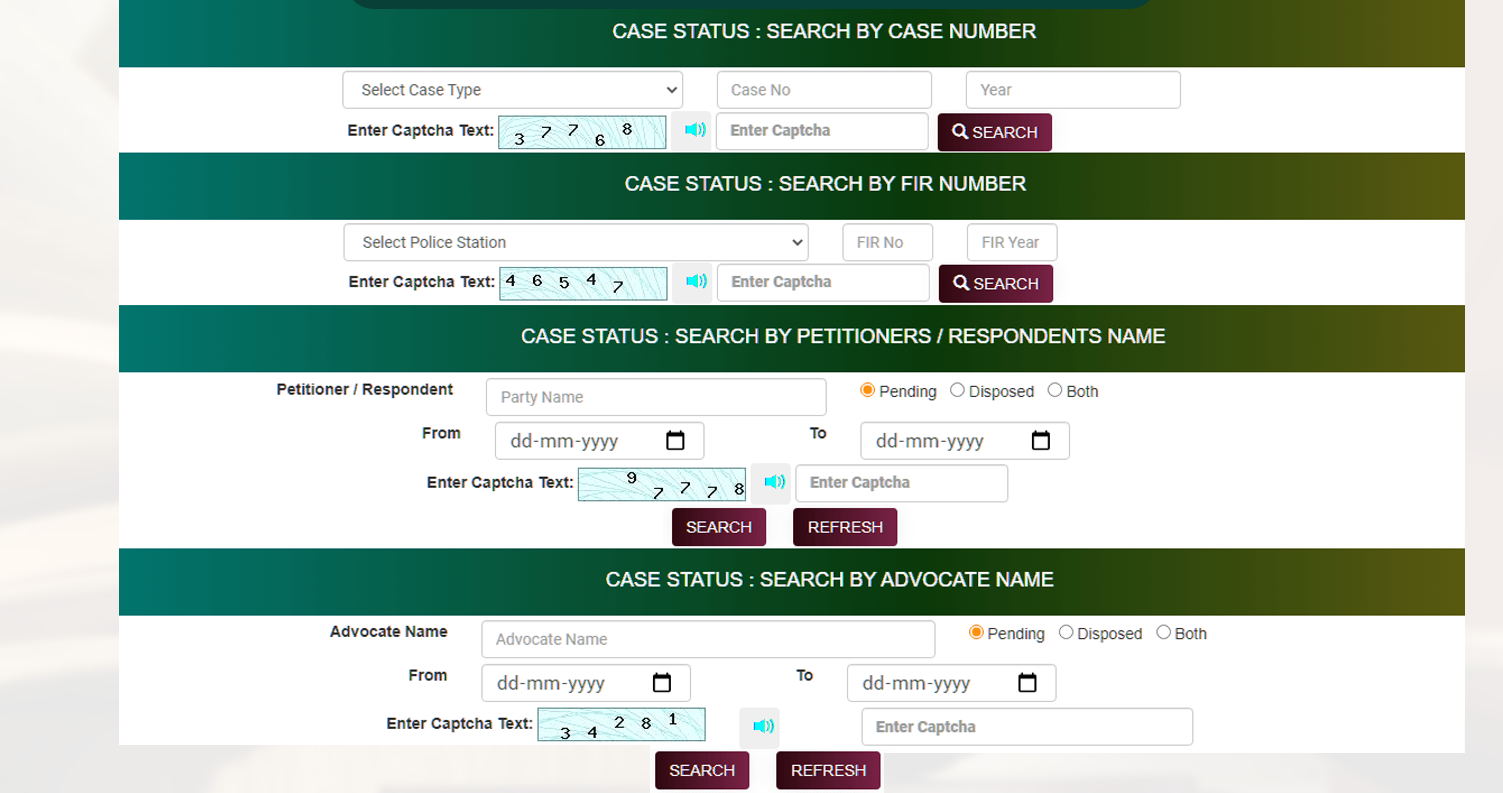

Identify Target Data

To develop an effective court case scraper, the first step is to analyze and understand the structure of the targeted court portals. This involves identifying the key data points that need to be extracted, which typically include:

- Case Number: The unique identifier assigned to each case.

- Party Names: Names of individuals or entities involved in the case.

- Case Status: The current state of the case (e.g., pending, resolved, dismissed).

- Court Decisions: Rulings, judgments, or verdicts issued by the court.

A thorough understanding of the data format and retrieval methods is crucial for ensuring accurate and efficient scraping.
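Writing the target fields down as a small data structure before sending any requests makes the later steps easier to test and keeps output consistent across portals. The following is a minimal sketch, assuming the four data points listed above; the `CaseRecord` class, its field names, the status vocabulary, and the sample values are all hypothetical and made up for illustration.

```python
from dataclasses import asdict, dataclass
from typing import Optional

# Hypothetical status vocabulary; real portals use their own wording.
KNOWN_STATUSES = {"pending", "resolved", "dismissed"}


@dataclass
class CaseRecord:
    """One scraped case, holding the target data points identified above."""
    case_number: str
    party_names: list[str]
    case_status: str
    court_decision: Optional[str] = None  # stays None until a ruling is recorded

    def __post_init__(self) -> None:
        # Normalise whitespace and case so records from different pages compare cleanly.
        self.case_number = self.case_number.strip()
        self.party_names = [name.strip() for name in self.party_names if name.strip()]
        self.case_status = self.case_status.strip().lower()
        if not self.case_number:
            raise ValueError("case_number must not be empty")

    def has_known_status(self) -> bool:
        return self.case_status in KNOWN_STATUSES


# Made-up sample values, for illustration only.
record = CaseRecord(" CS/123/2023 ", ["A. Sharma ", "B. Rao"], "Pending")
print(asdict(record))
```

Normalising values at construction time means records scraped from differently formatted pages can be compared and deduplicated directly.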

Request Data

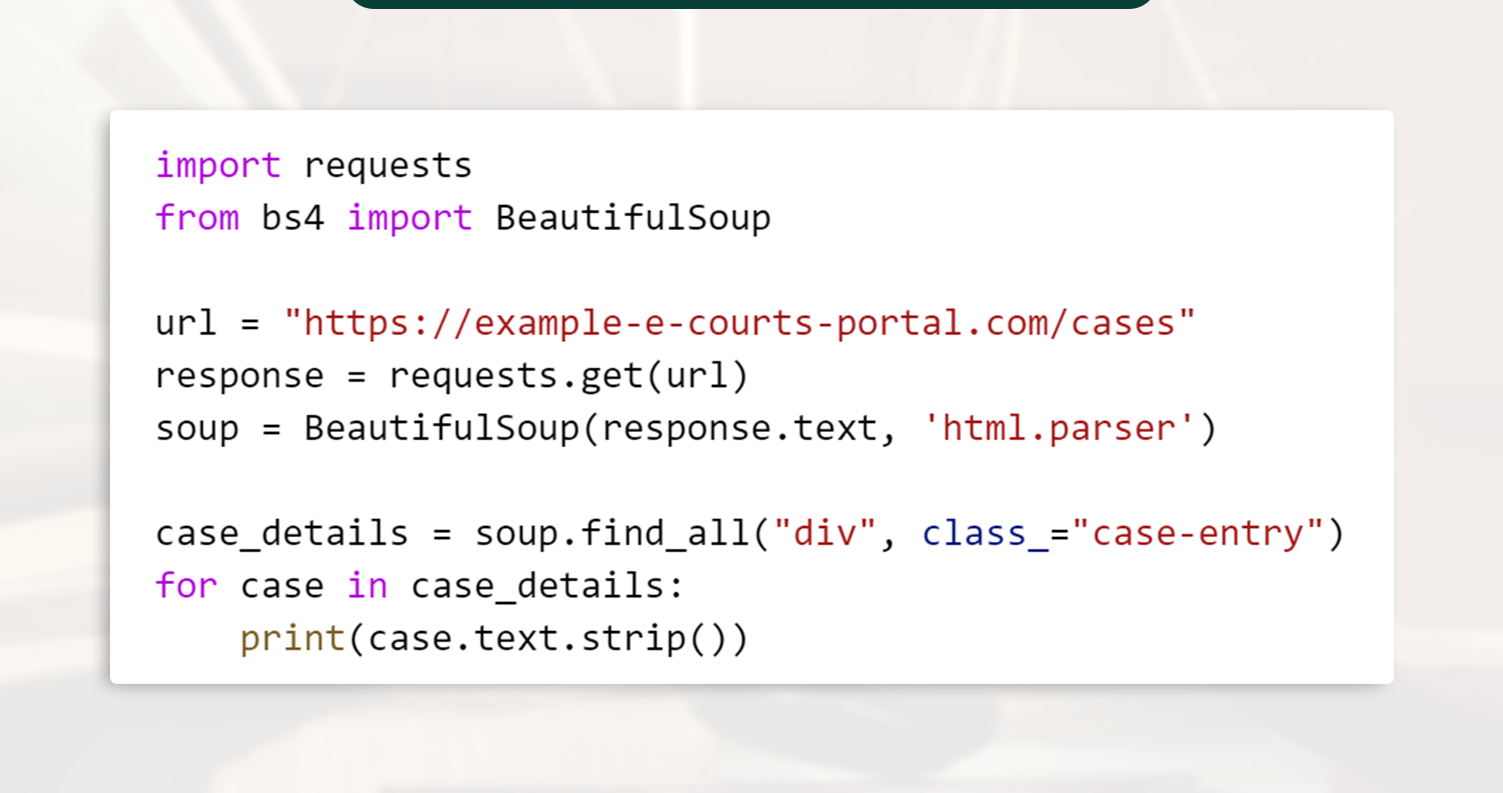

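Use Python's requests library to send HTTP requests to the court portal and retrieve the HTML of case-status pages, then parse the relevant elements with BeautifulSoup.

This approach works well when the case data is already present in the server-rendered HTML, for example in results tables.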
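Here is a minimal sketch of this requests-plus-BeautifulSoup pattern, assuming the case data sits in an HTML table whose first row holds header cells. The URL, the `User-Agent` string, the `case-results` table id, and the helper names `fetch_html` and `parse_case_table` are hypothetical placeholders, not a real portal's markup.

```python
from typing import Optional

import requests
from bs4 import BeautifulSoup

# Hypothetical URL, User-Agent and table id: inspect the real portal and replace them.
CASE_LIST_URL = "https://example.org/case-status"
HEADERS = {"User-Agent": "legal-research-bot/0.1 (contact: you@example.org)"}


def fetch_html(url: str, params: Optional[dict] = None) -> str:
    """Download a page, raising requests.HTTPError on 4xx/5xx responses."""
    response = requests.get(url, params=params, headers=HEADERS, timeout=30)
    response.raise_for_status()
    return response.text


def parse_case_table(html: str, table_id: str = "case-results") -> list[dict]:
    """Return one dict per table row, keyed by the header cells of the first row."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id=table_id)
    rows = table.find_all("tr") if table is not None else []
    if not rows:
        return []
    headers = [th.get_text(strip=True) for th in rows[0].find_all("th")]
    records = []
    for row in rows[1:]:
        cells = [td.get_text(strip=True) for td in row.find_all("td")]
        if len(cells) == len(headers):  # skip spacer or malformed rows
            records.append(dict(zip(headers, cells)))
    return records
```

Keeping the download and the parsing in separate functions lets you test the parser against saved HTML files without sending requests to the live portal; in production you would call `parse_case_table(fetch_html(CASE_LIST_URL))`.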

Handle Dynamic Content with Selenium

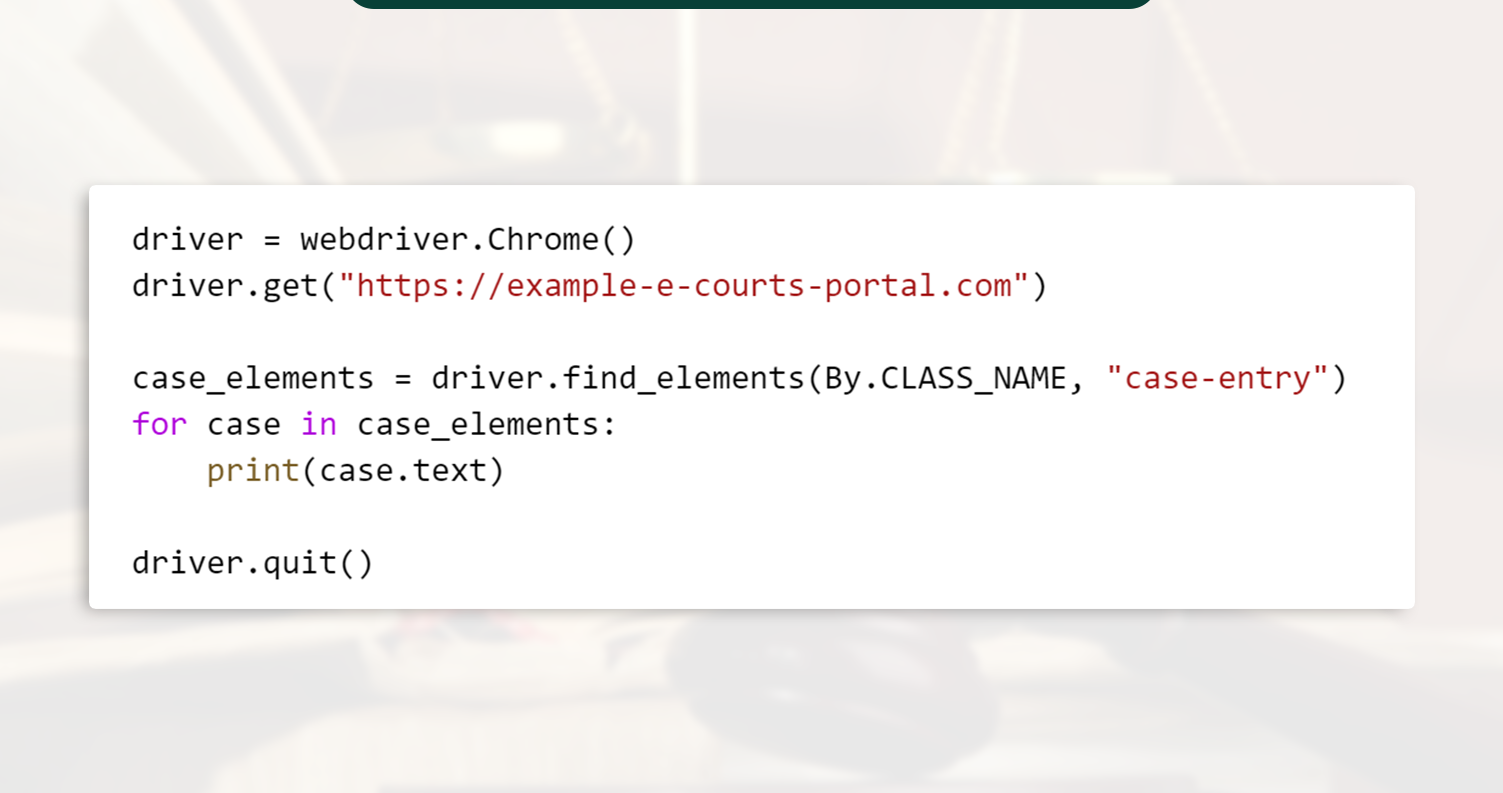

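Some court portals load case data with JavaScript after the initial page has loaded, so a plain HTTP request returns an incomplete page. Selenium automates a real browser, letting your script fill in search forms, wait for results to render, and read them.

With a Python E-Courts Scraper built on Selenium, you can navigate these pages and extract dynamically loaded data efficiently.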

Leveraging E-Courts API Scraping

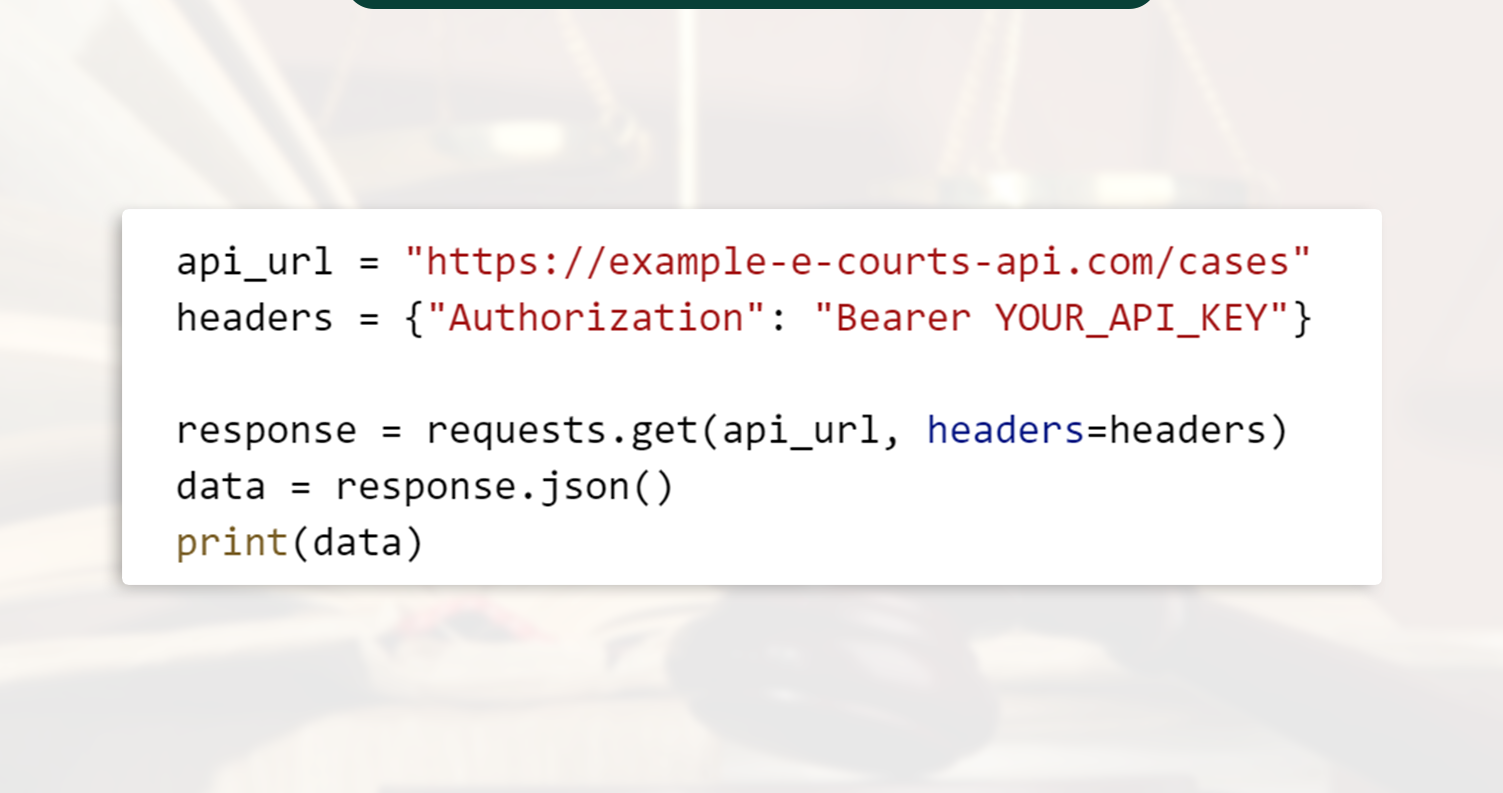

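Some jurisdictions provide APIs for structured data extraction, which you can call directly from Python.

Where an official API exists, E-Courts API Scraping is usually preferable to parsing HTML: responses arrive in a structured format, and access is governed by documented terms of use, which you should still follow.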
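Calling such an API from Python usually amounts to authenticated GET requests with query parameters, followed by paging through the results. The sketch below assumes a hypothetical JSON API: the endpoint URL, bearer-token authentication, the `court`, `page` and `page_size` parameters, and the `results`/`next` response fields are all placeholders to be replaced with what the official documentation specifies.

```python
import time
from typing import Optional

import requests

# Hypothetical endpoint, auth scheme, parameters and JSON fields: check the official API docs.
API_URL = "https://api.example.org/v1/cases"
API_TOKEN = "YOUR_API_TOKEN"


def fetch_cases(court_code: str, page_size: int = 50, max_pages: int = 10,
                delay: float = 1.0, session: Optional[requests.Session] = None) -> list[dict]:
    """Collect case records page by page, stopping when no further page is reported."""
    http = session if session is not None else requests.Session()
    http.headers.update({"Authorization": f"Bearer {API_TOKEN}"})
    results: list[dict] = []
    for page in range(1, max_pages + 1):
        params = {"court": court_code, "page": page, "page_size": page_size}
        resp = http.get(API_URL, params=params, timeout=30)
        resp.raise_for_status()
        payload = resp.json()
        results.extend(payload.get("results", []))
        if not payload.get("next"):  # assumed field: truthy when more pages exist
            break
        time.sleep(delay)  # pause between pages to stay within rate limits
    return results
```

The `max_pages` cap is a safety net so that a misbehaving `next` field cannot cause an endless loop.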

Best Practices for E-Courts Website Scraping

Following best practices to ensure efficiency, compliance, and sustainability is crucial when implementing a Python E-Courts Scraper.

Below are key guidelines to enhance your scraping process:

- Respect Robots.txt: Always review and adhere to the website’s robots.txt file and terms of use to ensure legal and ethical scraping.

- Use Rotating Proxies: Implement rotating proxies to distribute requests and prevent IP bans, especially when scraping large volumes of data.

- Implement Delay Between Requests: Introduce a delay between HTTP requests to prevent overwhelming the server and reduce the risk of detection.

- Store Data Efficiently: Optimize data storage using robust databases such as MySQL or MongoDB, ensuring seamless retrieval and scalability.

- Regularly Update Your Scraper: Websites frequently change their structure, so updating your scraping scripts for continued compatibility is essential.
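Several of these practices fit in a few lines. The sketch below checks each URL against `robots.txt` with the standard library's `urllib.robotparser`, retries transient failures with exponential backoff through urllib3's `Retry`, and pauses between requests. The `USER_AGENT` value, the default two-second delay, and the helper names are assumptions for illustration.

```python
import time
import urllib.robotparser
from urllib.parse import urljoin

import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

USER_AGENT = "legal-research-bot/0.1"  # hypothetical name; identify your scraper honestly


def load_robots(site_root: str) -> urllib.robotparser.RobotFileParser:
    """Download and parse the site's robots.txt (performs a network request)."""
    parser = urllib.robotparser.RobotFileParser(urljoin(site_root, "/robots.txt"))
    parser.read()
    return parser


def make_session(retries: int = 3) -> requests.Session:
    """Session that retries 429 and 5xx responses with exponential backoff."""
    retry = Retry(total=retries, backoff_factor=1.0, status_forcelist=[429, 500, 502, 503, 504])
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    session.headers["User-Agent"] = USER_AGENT
    return session


def polite_get_all(urls, robots, session, delay: float = 2.0) -> dict:
    """Fetch each URL that robots.txt allows, waiting `delay` seconds between requests."""
    pages = {}
    for url in urls:
        if not robots.can_fetch(USER_AGENT, url):
            continue  # disallowed by robots.txt: skip it rather than work around it
        if pages:
            time.sleep(delay)  # no pause before the very first request
        response = session.get(url, timeout=30)
        response.raise_for_status()
        pages[url] = response.text
    return pages
```

A typical run would build the parser with `load_robots("https://example.org")` (a placeholder domain), create a session with `make_session()`, and pass both to `polite_get_all` along with your list of case URLs.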

By following these best practices, you can enhance the reliability and effectiveness of your E-Courts Website Scraping operations.
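For the storage point, the sketch below uses SQLite from Python's standard library as a lightweight stand-in for MySQL or MongoDB, with pandas for reading the results back. It assumes each scraped record is a dict with the hypothetical keys `case_number`, `party_names`, `case_status` and `court_decision`, and makes the case number the primary key so that re-scraping a case updates its row instead of duplicating it.

```python
import sqlite3
from contextlib import closing

import pandas as pd

# Hypothetical schema: one row per case, keyed by case number.
SCHEMA = """
CREATE TABLE IF NOT EXISTS cases (
    case_number    TEXT PRIMARY KEY,
    party_names    TEXT,
    case_status    TEXT,
    court_decision TEXT
)
"""


def _to_row(record: dict) -> tuple:
    """Flatten one record; a list of parties is stored as a '; '-separated string."""
    parties = record.get("party_names", "")
    if isinstance(parties, (list, tuple)):
        parties = "; ".join(parties)
    return (record["case_number"], parties, record.get("case_status"), record.get("court_decision"))


def save_cases(db_path: str, records: list[dict]) -> None:
    """Insert new cases and overwrite existing ones with the same case number (an upsert)."""
    with closing(sqlite3.connect(db_path)) as conn:
        conn.execute(SCHEMA)
        conn.executemany("INSERT OR REPLACE INTO cases VALUES (?, ?, ?, ?)",
                         [_to_row(r) for r in records])
        conn.commit()


def load_cases(db_path: str) -> pd.DataFrame:
    """Read every stored case into a pandas DataFrame for analysis."""
    with closing(sqlite3.connect(db_path)) as conn:
        return pd.read_sql_query("SELECT * FROM cases ORDER BY case_number", conn)
```

`INSERT OR REPLACE` is SQLite syntax; in MySQL the equivalent is `INSERT ... ON DUPLICATE KEY UPDATE`, and in MongoDB an `update_one` call with `upsert=True`.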

How Can Mobile App Scraping Help You?

We specialize in developing cutting-edge solutions for E-Courts Website Scraping and legal data extraction. Our expertise enables law firms, researchers, and businesses to access structured legal data efficiently.

Here’s how our services can support your needs:

- Automated Court Case Scraper designed for large-scale legal data retrieval, streamlining case monitoring and research.

- Custom Python E-Courts Scraper tailored to meet your unique legal data requirements with precision and efficiency.

- API integration for seamless access to E-Courts Web Datasets, enhancing data accessibility and usability.

- Strict adherence to legal data standards, ensuring compliance and preventing unauthorized access.

- Real-time data updates to facilitate accurate and up-to-date case tracking for legal professionals.

If you need to scrape E-Courts data, our team is ready to help you build a scalable and efficient scraper that meets your requirements. Get in touch to explore how we can support your legal data needs!

Conclusion

Enhancing legal research efficiency with E-Courts Data Scraping is now easier than ever with Python automation. By setting up an optimized scraping environment, leveraging APIs, and following best practices, legal professionals can streamline data collection.

Need expert support? We specialize in E-Courts Website Scraping and offer tailored solutions for law firms, legal researchers, and organizations to automate court data retrieval.

Contact Mobile App Scraping today to explore how we can develop a custom Automated Court Case Scraper to meet your needs!