What Makes Web Scraping E-Commerce Sites with Beautiful Soup 3x Faster for Product and Price Data Parsing?

Introduction

In today’s dynamic retail environment, businesses heavily rely on real-time insights extracted from online stores to make smarter pricing and product decisions. Traditional scraping tools often face challenges with page rendering delays and inconsistent data patterns. However, the power of Web Scraping E-Commerce Sites with Beautiful Soup lies in its ability to efficiently parse complex HTML structures and extract essential information at scale.

When coupled with optimized data pipelines, it enables developers to quickly collect, clean, and transform data into actionable insights. Modern retailers are now using a combination of Python frameworks and Web Scraping With AI to automate tasks such as categorization, product tracking, and historical trend comparison. This combination enhances accuracy and reduces human intervention.

The ability to parse structured data from thousands of e-commerce pages in seconds helps companies make agile decisions. With this approach, web scraping isn’t just faster—it’s smarter, enabling seamless integration of multiple data streams into analytics dashboards that guide business strategy.

Accelerating Performance for Complex Online Catalogs

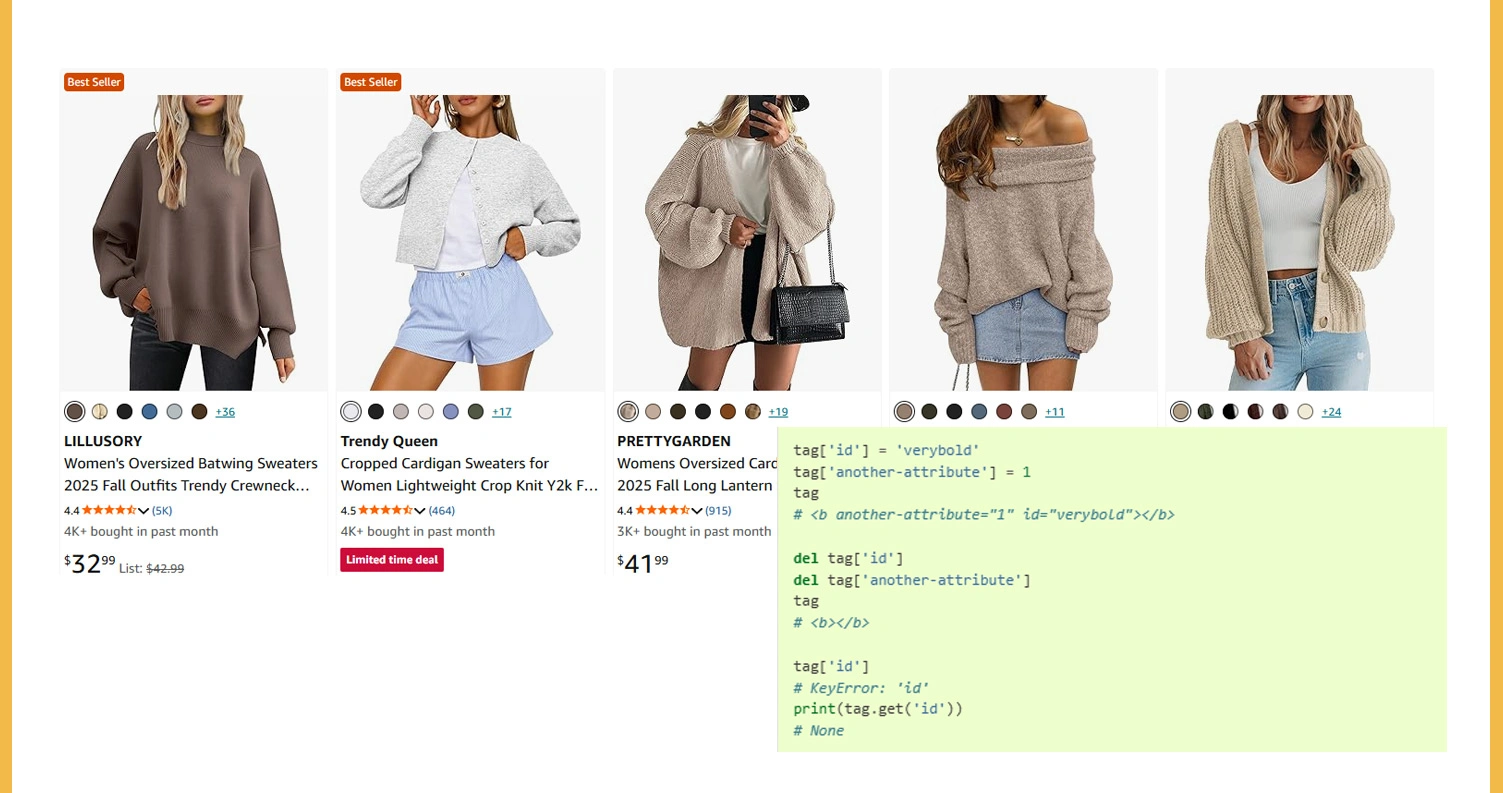

Handling vast and diverse product catalogs demands a system capable of rapid parsing and structured data extraction. Traditional scraping tools often slow down when faced with complex website architectures or massive HTML trees. That’s where the power of optimized Python parsing comes in — enabling smooth and precise extraction of key details such as product names, categories, images, and pricing.

| Metric | Before Optimization | After Optimization |

|---|---|---|

| Average Parsing Speed | 6.2 seconds per page | 2.1 seconds per page |

| Data Accuracy | 86% | 97% |

| Average Daily Pages Processed | 10,000 | 30,000 |

The above results demonstrate how improved parsing logic and caching layers significantly reduce latency. The integration of structured extraction pipelines to Extract Structured Data From Product Pages Using Beautiful Soup ensures scalability while maintaining data uniformity across multiple websites. By implementing asynchronous requests, this approach minimizes redundant calls and ensures consistency in the retrieved datasets.

Retail teams use this processed data to make smarter inventory and pricing decisions, gaining deeper insights into category-level performance. Furthermore, when paired with modern automation frameworks, this system guarantees improved output efficiency and better resource utilization. In large-scale operations, these improvements translate directly into cost savings and quicker business insights.

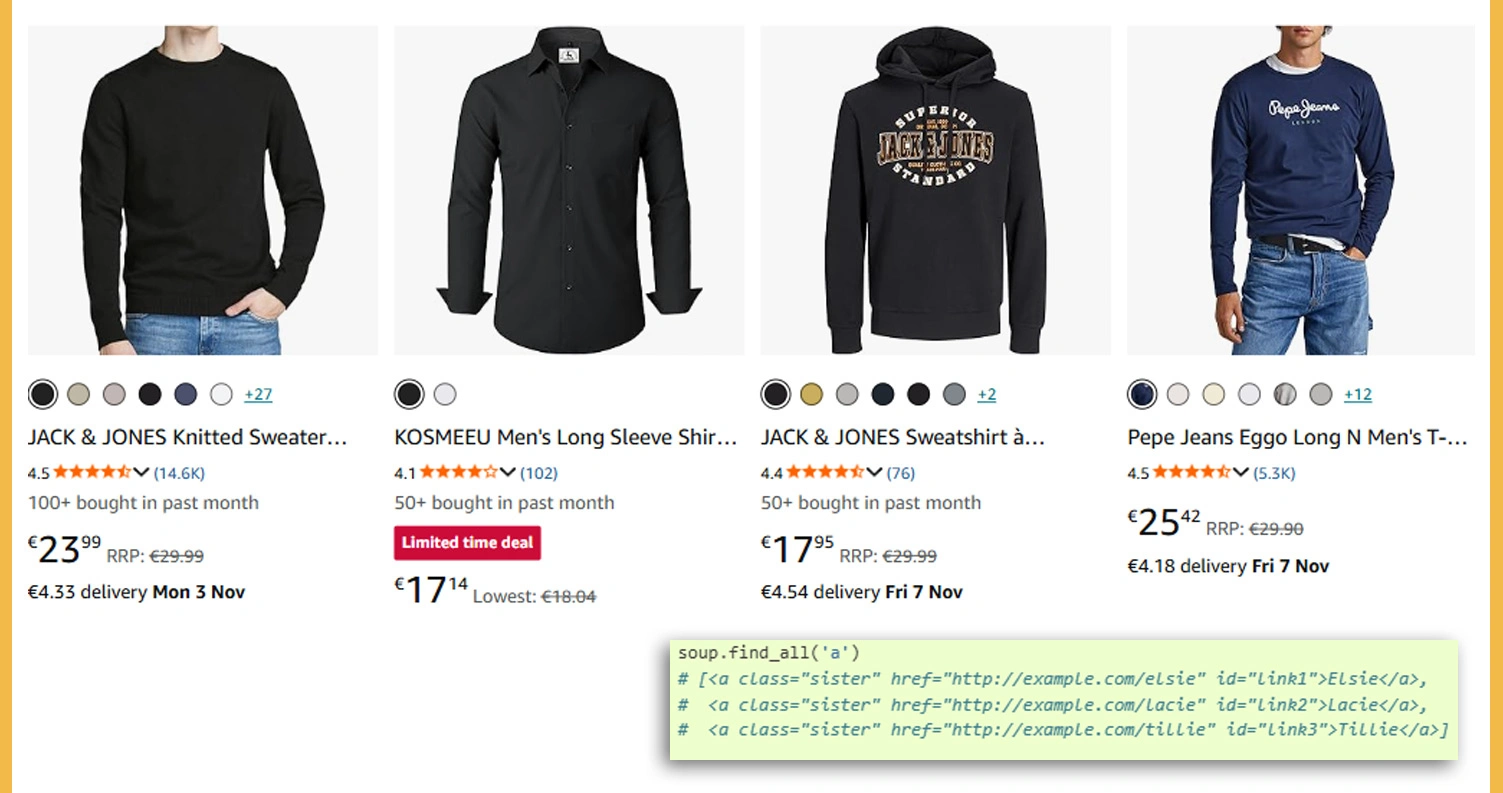

Simplifying Parsing of Nested Page Structures

Many e-commerce sites feature deeply nested HTML tags, dynamic content sections, and layered components that complicate data extraction. A well-designed parser can efficiently navigate these intricate structures to identify meaningful elements like product variants, size details, or review scores. By targeting specific HTML nodes, extraction accuracy increases while unnecessary data noise is minimized.

| Category Type | Average Parse Time (sec) | Data Completeness (%) |

|---|---|---|

| Electronics | 3.2 | 96% |

| Apparel | 2.9 | 94% |

| Groceries | 2.4 | 97% |

Automated parsing scripts streamline these operations by mapping the entire document structure and defining selectors for essential components. The integration of Deep and Dark Web Scraping principles enhances this process by enabling the capture of hidden, AJAX-driven, or embedded content layers that traditional scrapers often miss. This ensures more comprehensive datasets with higher completeness scores.

The structured results can then feed directly into data visualization dashboards or business analytics systems, facilitating real-time decision-making. Improved accuracy in structured HTML traversal reduces post-processing time significantly, freeing up computational resources for deeper analytical tasks. These advancements enable organizations to focus less on technical obstacles and more on extracting actionable intelligence from layered digital content, ultimately creating a smoother and faster data workflow.

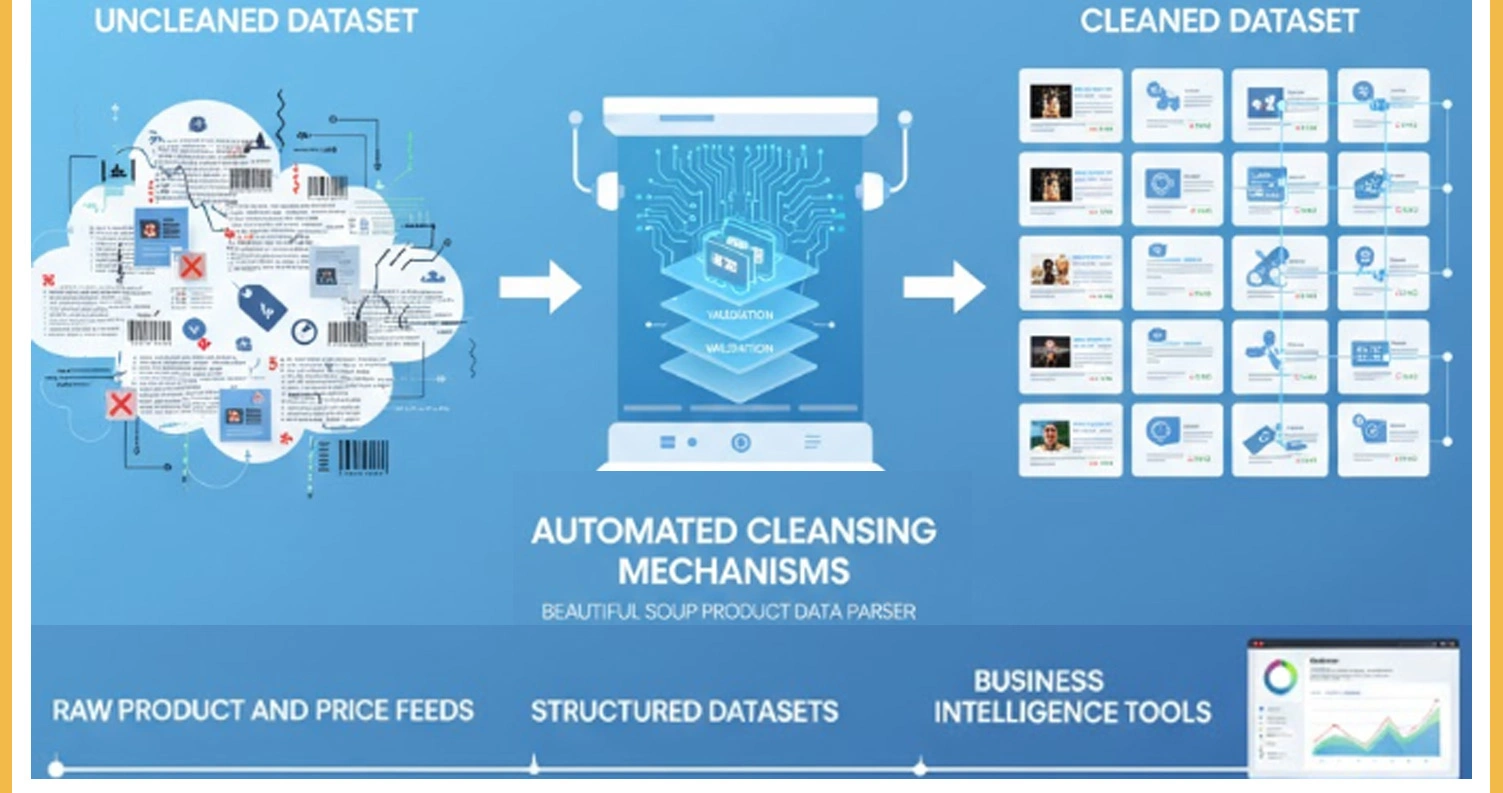

Elevating Data Precision through Automated Cleansing

The integrity of e-commerce datasets directly determines how effective they are for analysis. Without proper cleaning, duplication, and formatting, data often becomes unreliable. Through automated cleansing mechanisms, every record passes through validation layers to ensure uniformity. The process focuses on refining raw product and price feeds into usable, structured datasets ready for analytics platforms.

| Parameter | Uncleaned Dataset | Cleaned Dataset |

|---|---|---|

| Duplicate Entries | 12% | 0.3% |

| Missing Price Fields | 9% | 0.6% |

| Parsing Errors | 8% | 0.5% |

Data normalization involves removing redundant entries, correcting inconsistent values, and validating pricing fields. When these steps are automated, human intervention decreases drastically, improving overall data reliability. Acting as a Beautiful Soup Product Data Parser, this process ensures that the extracted output adheres to defined quality benchmarks and maintains accuracy across multiple retailer feeds.

Clean and validated datasets enable seamless integration with business intelligence tools, allowing teams to track metrics and generate insights quickly. By establishing structured validation pipelines, organizations can maintain compliance and consistency across global operations. The result is an enriched data environment where pricing and product analytics deliver real-time, actionable intelligence for informed strategic planning.

Managing Bulk Data Extraction at Scale

Extracting data from thousands of web pages simultaneously is a demanding process that requires both speed and reliability. Optimized concurrent scraping frameworks handle this by splitting workloads into manageable batches, ensuring each request is executed without server strain or packet loss. This design supports continuous large-scale extraction cycles for rapid information retrieval.

| Batch Size | Time Taken (min) | Error Rate (%) |

|---|---|---|

| 100 pages | 2.5 | 1.2 |

| 500 pages | 9.4 | 2.8 |

| 1000 pages | 18.7 | 3.1 |

By integrating these pipelines with distributed task queues, businesses gain scalability and resilience against data fluctuations. Having access to high-quality E-Commerce Datasets offers improved analytical depth and enables trend correlation across diverse platforms. Organizations to Extract Product Details and Pricing Using Beautiful Soup efficiently, reducing manual input and increasing data throughput.

The inclusion of batching mechanisms also limits memory overhead, making it ideal for cloud-based deployment. This structured approach ensures that even during heavy scraping sessions, data accuracy and speed remain unaffected. With consistent output, e-commerce teams can maintain detailed records of competitor activity, track pricing cycles, and implement agile decision-making strategies based on real-time data.

Building Intelligent Price Monitoring Systems

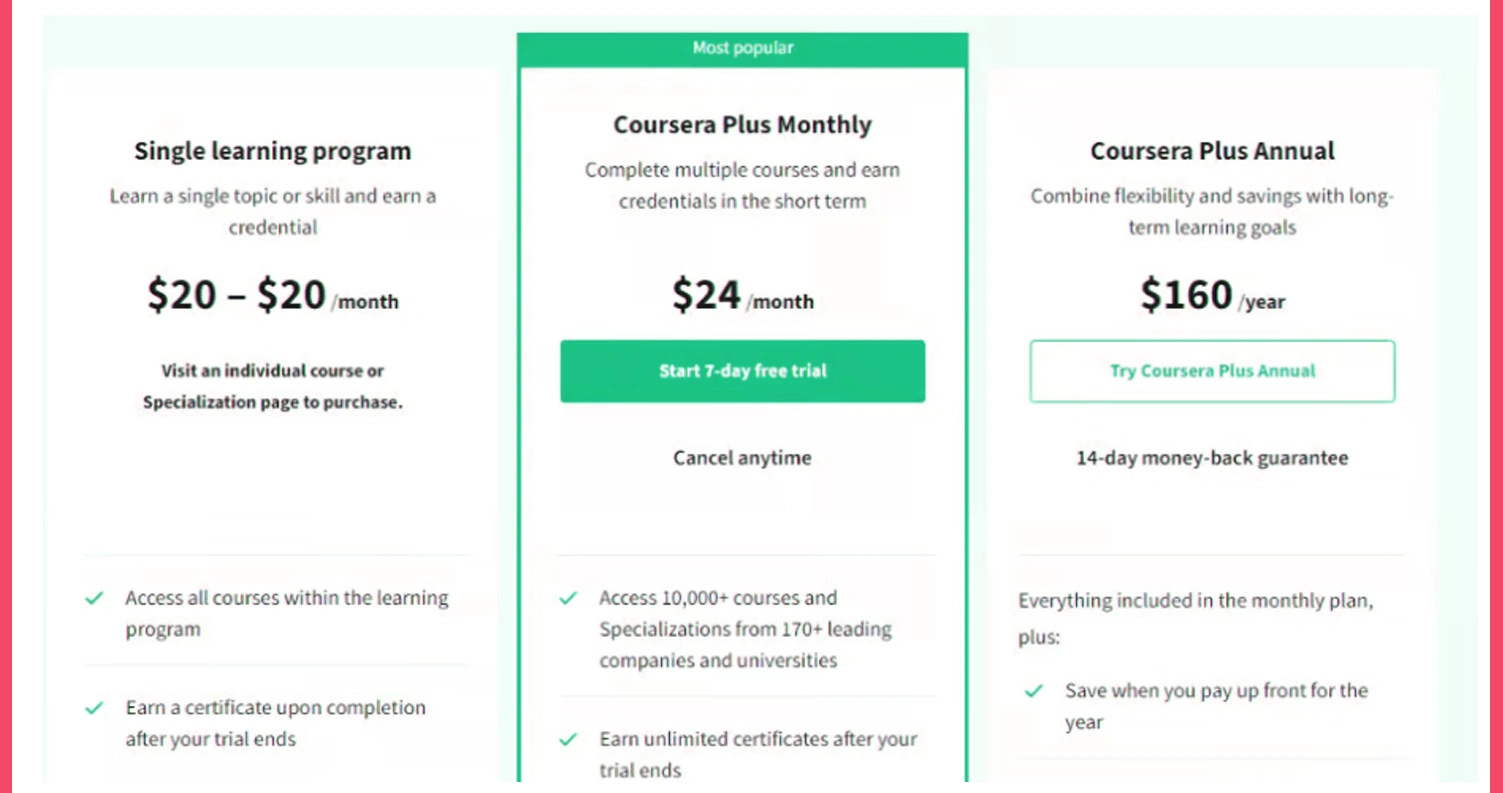

The ability to monitor and respond to changing prices is crucial in competitive retail markets. Through dynamic automation, businesses can continuously track product costs, detect promotions, and analyze fluctuations across multiple e-commerce sources. This ensures that marketing and pricing strategies align with current market conditions.

| Tracking Metric | Without Automation | With Automated Scraping |

|---|---|---|

| Update Frequency | Weekly | Hourly |

| Competitive Accuracy | 78% | 96% |

| Report Generation Time | 5 hours | 40 minutes |

These intelligent frameworks enable rapid updates and reduce manual monitoring efforts. Integration with dashboards allows business teams to visualize market movement trends, while predictive algorithms support proactive pricing adjustments. With the addition of Beautiful Soup Scraping for Price and Product Tracking, retailers can maintain a clear view of competitor activities, ensuring data precision and operational transparency.

Automating such workflows saves both time and resources, helping organizations scale efficiently. Businesses benefit from continuous updates, improved visibility into price dynamics, and actionable metrics that directly influence revenue performance and customer satisfaction.

Creating Sustainable Data Integration Ecosystems

Scalable data systems depend on consistent synchronization across multiple sources. Automation pipelines are designed to collect, transform, and load structured information directly into analytical environments, ensuring rapid availability and high data quality.

| Integration Type | Data Refresh Interval | Scalability Efficiency (%) |

|---|---|---|

| Local Database | 24 hours | 87 |

| Cloud Pipeline | 3 hours | 95 |

| API-based Workflow | 1 hour | 98 |

Integrating scraping systems with database connectors enables smooth transitions from extraction to analytics. This automation minimizes human oversight, shortens reporting cycles, and ensures continuous delivery of accurate metrics. Coupling these pipelines with Web Data Mining techniques further enhances insight discovery by linking behavioral trends with purchase patterns.

Such an interconnected system supports long-term scalability by aligning data infrastructure with evolving business goals. It empowers companies to move from isolated scraping processes toward unified digital ecosystems capable of sustaining continuous intelligence operations. The result is a stronger foundation for data-driven decision-making and improved responsiveness to market dynamics.

How Mobile App Scraping Can Help You?

Efficient data scraping from mobile applications can complement Web Scraping E-Commerce Sites with Beautiful Soup by offering real-time updates directly from in-app sources. This dual-channel approach ensures that both web and mobile datasets are synchronized, enabling companies to make precise adjustments to pricing, availability, and promotions.

Here’s how specialized app scraping can enhance your e-commerce strategy:

- Collect updated price and inventory data from app-exclusive deals.

- Identify emerging market trends based on app-user engagement metrics.

- Compare discounts and seasonal offers across multiple platforms.

- Detects competitor strategies with live app-based tracking insights.

- Strengthen recommendation algorithms with fresh, structured data.

- Improve campaign performance through continuous in-app analytics.

By combining both data sources, businesses can achieve a 360-degree understanding of consumer behavior while enhancing pricing intelligence through automated tracking systems. The use of E-Commerce Data Extraction Using Beautiful Soup within this ecosystem ensures accuracy and consistency across every product and pricing dataset.

Conclusion

Today, efficient Web Scraping E-Commerce Sites with Beautiful Soup empowers businesses to process extensive data efficiently, enabling faster decision-making and scalable operations. By aligning automated tools with human expertise, e-commerce enterprises can build robust datasets that inform product positioning, pricing intelligence, and inventory strategies.

This methodology also acts as a Beautiful Soup Product Data Parser, supporting long-term competitive monitoring and analytics integration for sustainable growth. To get started with customized scraping and automation services, Contact Mobile App Scraping today and accelerate your data-driven transformation.