
How does web scraping Starbucks with lxml work for accurate menu data extraction?

Introduction

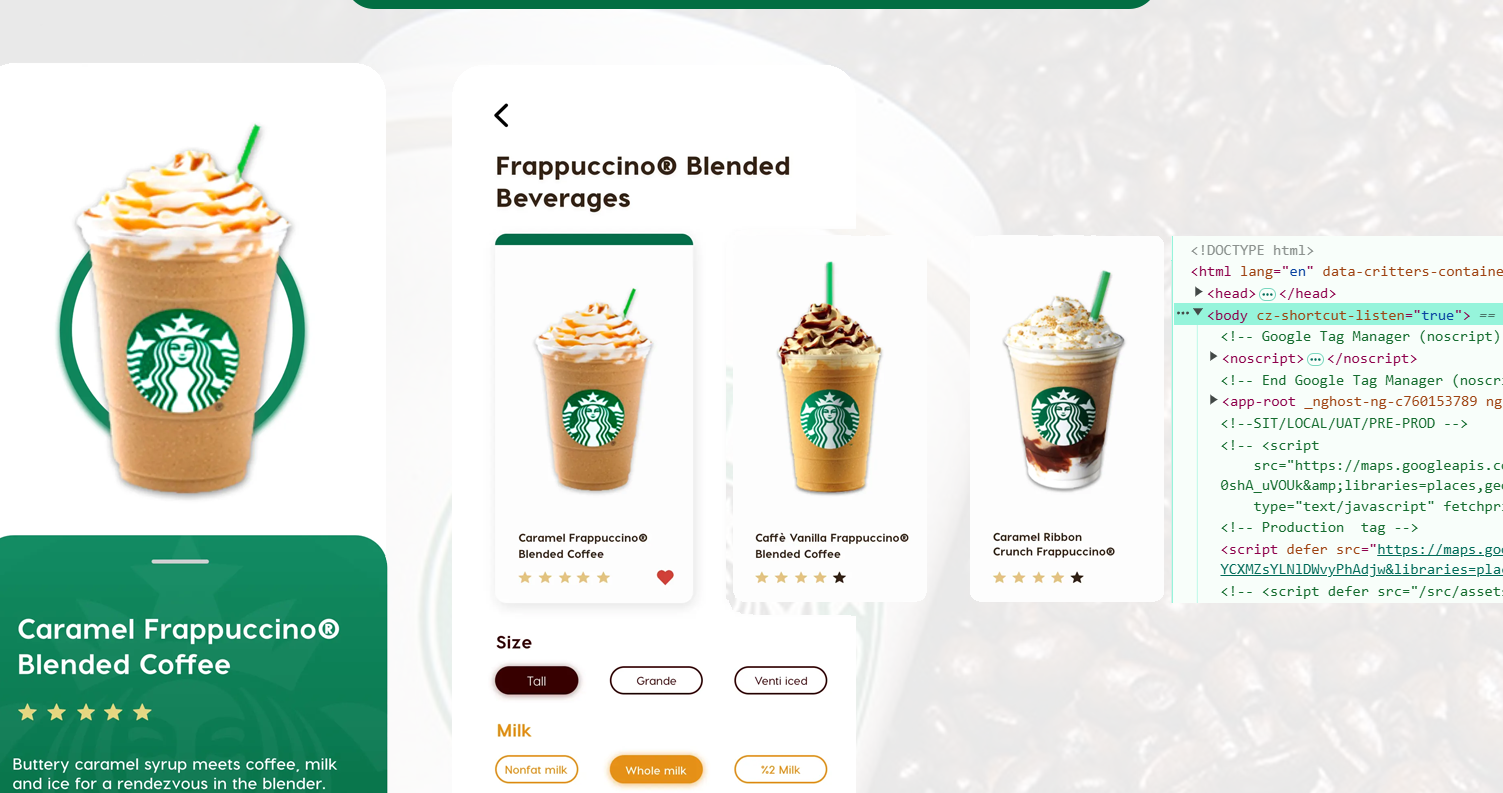

Scraping the Starbucks website with lxml lets you extract menu data efficiently and accurately. If you need structured, precise menu details from Starbucks' website, Python's lxml library offers a fast and robust way to parse the pages. Whether you need pricing, availability, or ingredient details, lxml simplifies the extraction.

In this blog, we'll walk through the Starbucks menu scraping process, highlighting the benefits of scraping with Python and how lxml helps extract Starbucks data with precision. We'll also look at Starbucks API data and compare it with web scraping so you can determine the most suitable method for your needs.

Why Scrape Starbucks Menu Data?

Starbucks provides a diverse menu that can vary depending on location, time, and ongoing promotions. To meet various business needs, analysts and developers often require accurate and structured Starbucks store data extraction for several use cases, including:

- Competitor Analysis: Scraping Starbucks pricing data to track and analyze market trends.

- Consumer Insights: Gathering data on popular beverages and food preferences to understand consumer behavior.

- Nutritional Research: Extracting detailed calorie and ingredient information for health-conscious analysis.

- E-commerce & Delivery Aggregation: Ensuring Starbucks menu listings are consistently accurate across third-party platforms and delivery apps.

While Starbucks API data may cover some of these needs, APIs are often restricted: they may require authentication or expose only a limited set of fields. In such cases, scraping the Starbucks website lets businesses gather a more complete and detailed dataset.

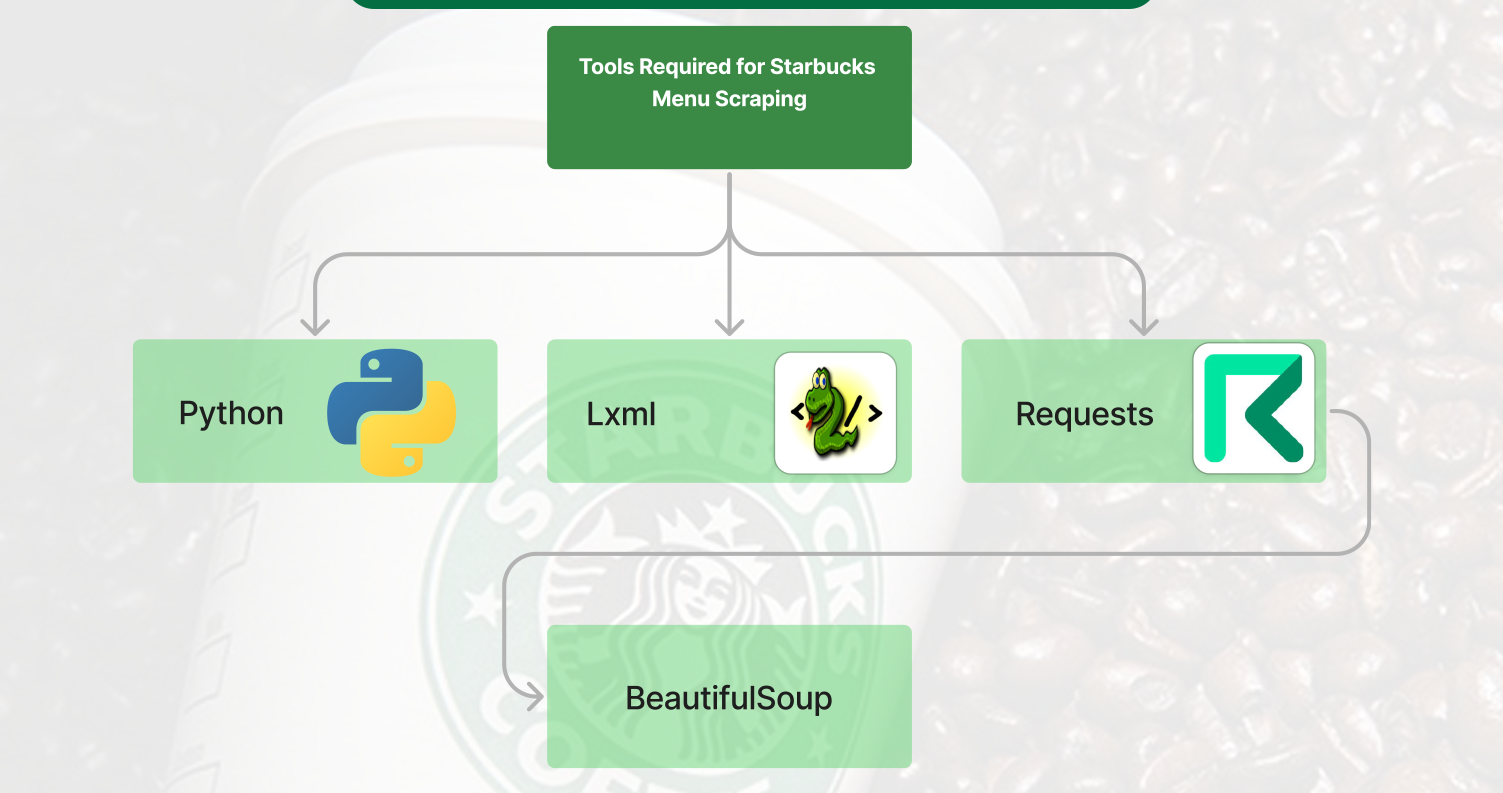

Tools Required for Starbucks Menu Scraping

Before embarking on the scraping process, it's essential to have the following tools at your disposal:

- Python: A robust programming language renowned for its web scraping and data extraction capabilities.

- lxml: A fast library for parsing HTML and XML, with XPath support for selecting elements.

- Requests: A simple yet effective tool for sending HTTP requests to retrieve page content.

- BeautifulSoup (Optional): Often used in combination with lxml, BeautifulSoup makes HTML parsing easier and more intuitive.

To get started, installing the necessary libraries is straightforward.

Execute the following command:

pip install lxml requests beautifulsoup4

Steps to Scrape Starbucks Menu Details Data Using lxml

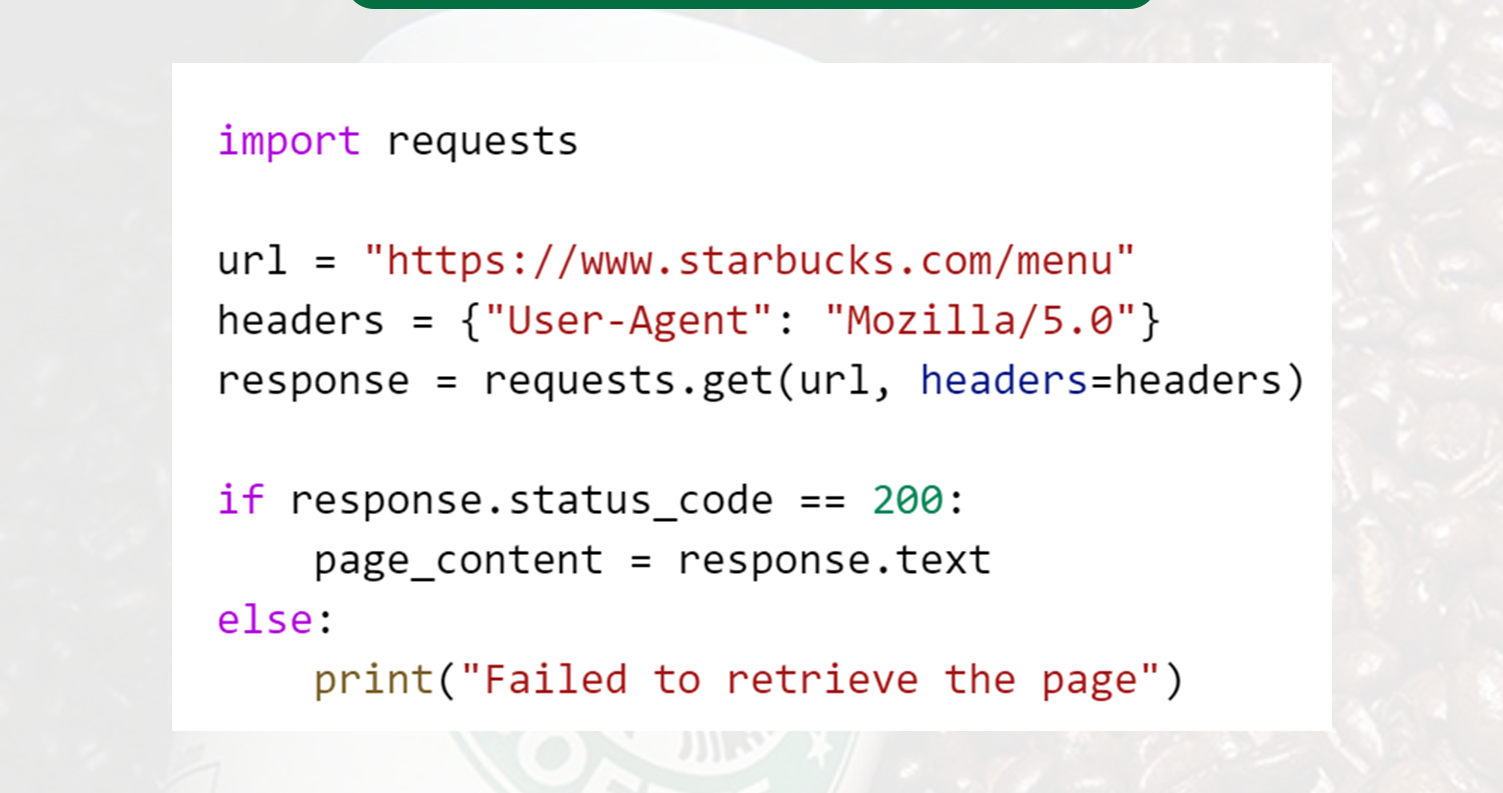

Fetch the Starbucks Menu Page

To scrape Starbucks pricing data, we must first retrieve the Starbucks menu page. Using the requests library, we can send a GET request with a set of browser-like headers.

Appropriate headers are crucial to avoid getting blocked while scraping coffee shop data.
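A minimal sketch of this step might look like the following. The URL, the header values, and the `fetch_menu_page` helper name are assumptions made for illustration; confirm the real menu URL and the site's terms before running it against the live site.

```python
import requests

# Assumed values for illustration only: verify the actual menu URL and
# choose headers that honestly identify your client.
MENU_URL = "https://www.starbucks.com/menu"
DEFAULT_HEADERS = {
    "User-Agent": "Mozilla/5.0 (compatible; menu-research-bot/0.1)",
    "Accept": "text/html,application/xhtml+xml",
    "Accept-Language": "en-US,en;q=0.9",
}


def fetch_menu_page(url=MENU_URL, headers=None, timeout=10):
    """Send a GET request and return the page HTML as text."""
    response = requests.get(url, headers=headers or DEFAULT_HEADERS, timeout=timeout)
    # Raise on 4xx/5xx responses instead of silently parsing an error page.
    response.raise_for_status()
    return response.text
```

Calling `fetch_menu_page()` returns the raw HTML string, which can then be handed to the parser. The explicit `timeout` keeps a stalled connection from hanging the script.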

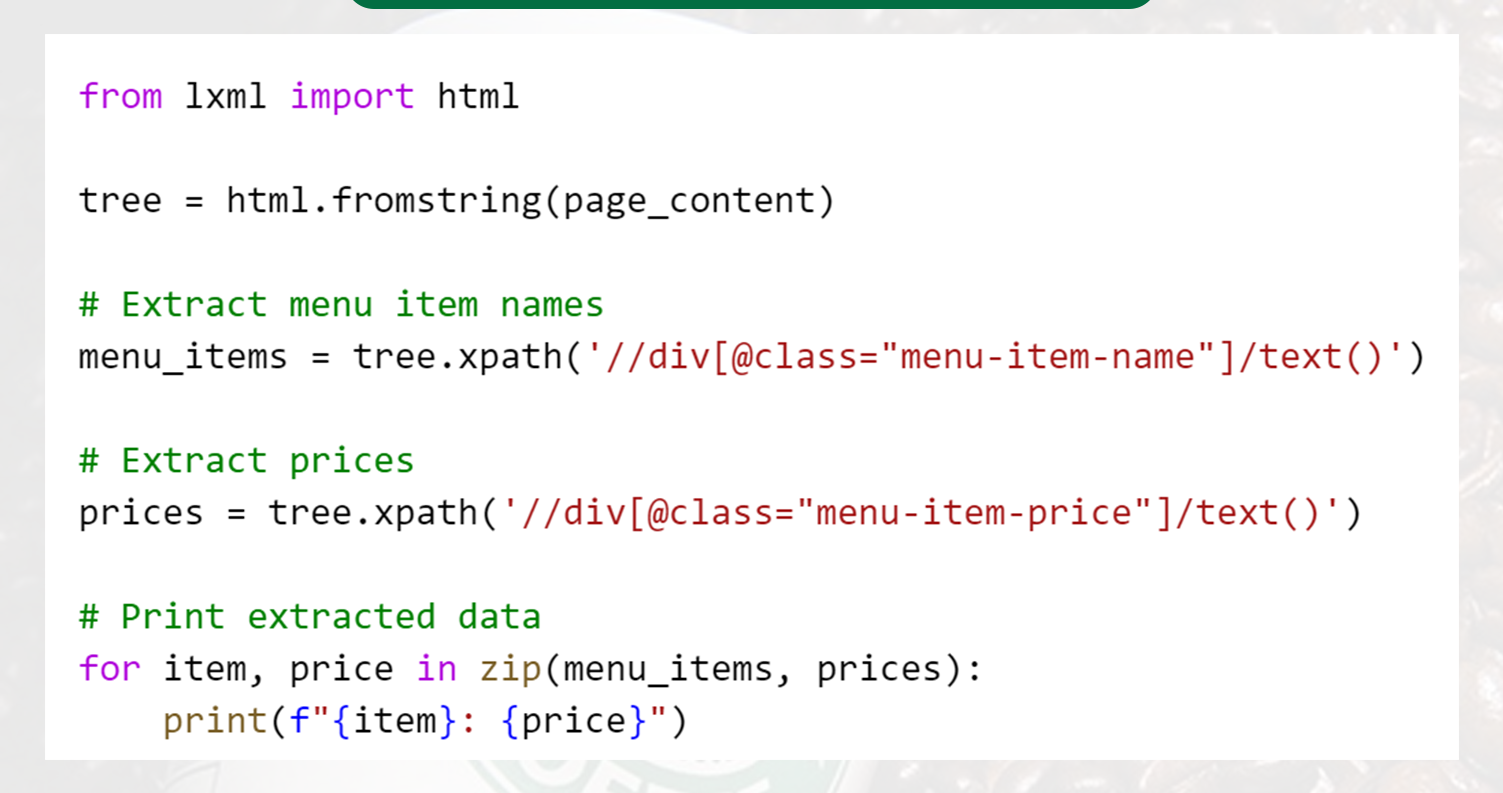

Parse the HTML Using lxml

Once we have the page content, the next step is to parse it with lxml and extract the Starbucks menu data, typically by building an element tree and selecting nodes with XPath expressions.

This approach targets the relevant elements directly in the page's HTML structure, which keeps Starbucks menu scraping precise as long as the selectors match the live markup.
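As a rough sketch of the parsing step, the example below runs lxml's XPath support over a small sample document. The markup, the class names (`menu-item`, `item-name`, `item-price`), and the sample values are invented for illustration and do not reflect the real Starbucks page, so the selectors would need adapting after inspecting the actual HTML.

```python
from lxml import html

# Invented sample markup; the real page structure will differ.
SAMPLE_HTML = """
<html><body>
  <div class="menu-item">
    <h3 class="item-name">Caffe Latte</h3>
    <span class="item-price">$4.25</span>
  </div>
  <div class="menu-item">
    <h3 class="item-name">Cold Brew</h3>
  </div>
</body></html>
"""


def parse_menu(page_source):
    """Return a list of {"name", "price"} dicts found in the HTML."""
    tree = html.fromstring(page_source)
    items = []
    for node in tree.xpath('//div[contains(@class, "menu-item")]'):
        # string(...) yields an empty string when the element is missing.
        name = node.xpath('string(.//h3[contains(@class, "item-name")])').strip()
        price = node.xpath('string(.//span[contains(@class, "item-price")])').strip()
        if name:
            items.append({"name": name, "price": price or None})
    return items


menu_items = parse_menu(SAMPLE_HTML)
```

Recording a missing price as `None` rather than dropping the item makes gaps in the data visible later in the pipeline.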

Handling Starbucks Store Data Extraction Challenges

When scraping Starbucks pricing data, several potential challenges may arise, including:

- Dynamic Content: Starbucks' website may utilize JavaScript to load data dynamically. In such instances, tools like Selenium or Puppeteer might be required to extract the information properly.

- CAPTCHA and Blocks: Frequent scraping requests can trigger CAPTCHAs or IP bans. To mitigate this, throttle your request rate, and consider proxies or rotating user agents where that is permitted.

- Data Structure Changes: Starbucks may occasionally update its website layout, so your scraping script will need modifications to accommodate changes in the data structure.

For more advanced Starbucks store data scraping, a browser automation tool like Selenium driving a headless browser can render JavaScript-loaded content and make extraction more reliable.
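Two of the challenges above, blocks and layout changes, can be partly handled in plain Python, as sketched below. The sketch retries transient failures with backoff, cycles through a short list of user agents, and fails loudly when no items are found. The helper names, the user-agent strings, and the `LayoutChangedError` class are hypothetical, and it does not cover JavaScript-rendered content, which would still need a tool such as Selenium.

```python
import itertools

import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

# Illustrative user-agent strings; substitute values suited to your client.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15",
]
_ua_cycle = itertools.cycle(USER_AGENTS)


def build_session(retries=3, backoff=1.0):
    """Create a session that retries throttling and server errors with backoff."""
    retry = Retry(
        total=retries,
        backoff_factor=backoff,
        status_forcelist=[429, 500, 502, 503, 504],
    )
    session = requests.Session()
    adapter = HTTPAdapter(max_retries=retry)
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session


def next_headers():
    """Return headers carrying the next user agent in the rotation."""
    return {"User-Agent": next(_ua_cycle)}


class LayoutChangedError(RuntimeError):
    """Raised when the parser finds fewer items than expected."""


def require_items(items, minimum=1):
    """Fail loudly if the selectors appear to have stopped matching."""
    if len(items) < minimum:
        raise LayoutChangedError(
            f"expected at least {minimum} menu items, found {len(items)}"
        )
    return items
```

Raising an error when nothing matches turns a silent layout change into an immediate, visible failure rather than an empty dataset.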

Comparing Starbucks API Data vs. Starbucks Website Data Scraping

Starbucks API data and Starbucks website data scraping each offer distinct advantages and drawbacks. Here's a detailed breakdown of the features:

| Feature | Starbucks API Data | Starbucks Website Data Scraping |
|---|---|---|
| Data Accuracy | High (as it is official data) | High (provides real-time data extraction) |
| Data Availability | Limited to the data accessible through the API | Can extract all visible data available on the website |
| Authentication | Typically required for most API access | No authentication is required for data scraping |
| Customization | Limited to predefined fields provided by the API | Highly customizable, allowing extraction of various data points |

For those looking to scrape Starbucks menu details comprehensively, Starbucks website data scraping offers greater flexibility and the ability to extract a broader range of information.

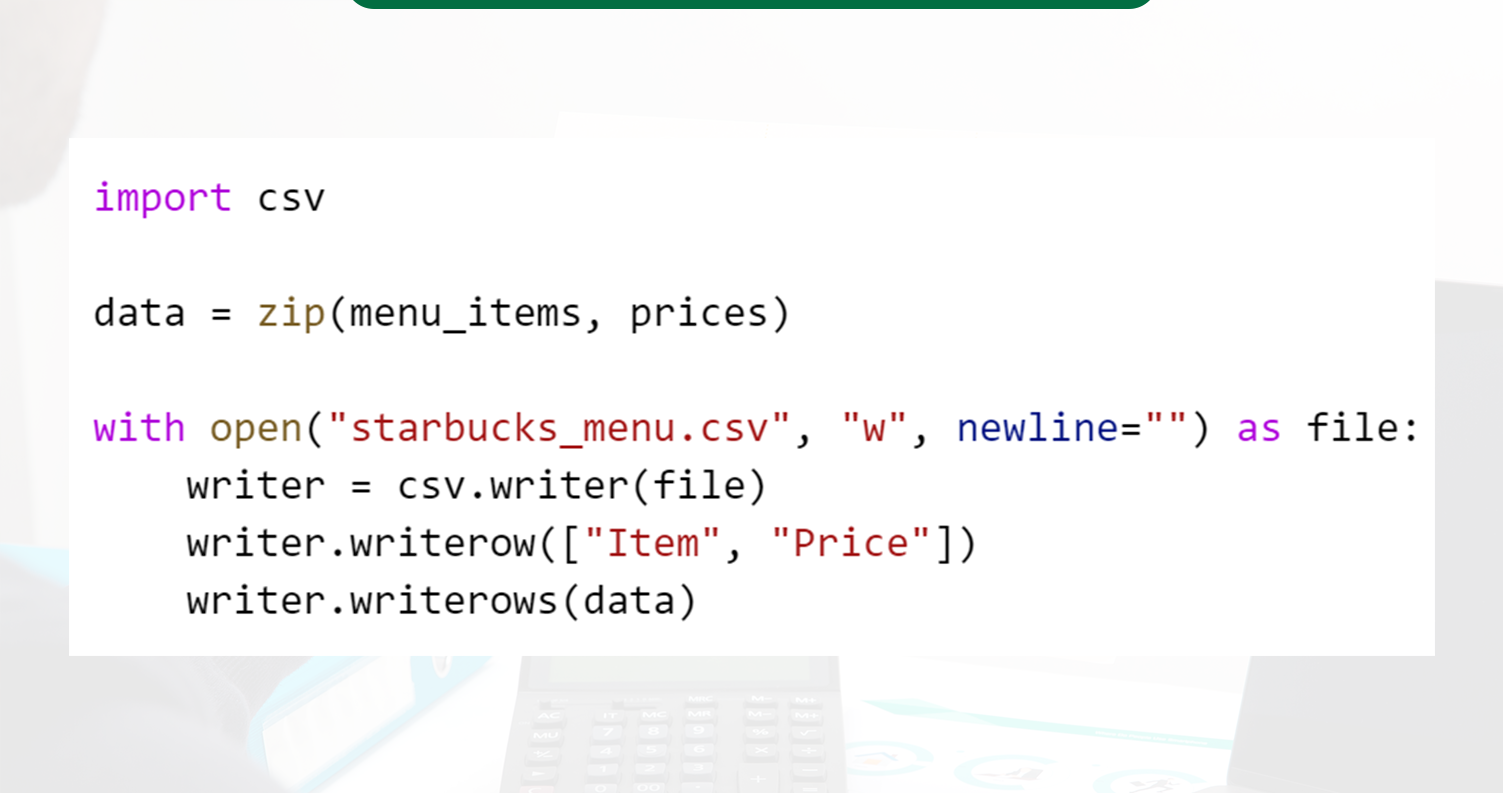

Storing the Starbucks Store Dataset

Once you extract Starbucks data, it's crucial to store it efficiently for easy access and analysis.

You can save the data in the following formats:

- CSV: This format is ideal for straightforward data analysis and quick reviews.

- JSON: An excellent option for integration with APIs and other applications, especially when dealing with structured data.

- Database: Storing the Starbucks store dataset in a relational database like MySQL or a NoSQL database like MongoDB is suitable for long-term storage and more advanced analysis.

For quick analysis, the extracted data can be saved to a CSV file using Python's built-in csv module.
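A minimal sketch of the CSV and JSON options, assuming the extracted items are a list of dictionaries with `name` and `price` keys (an assumed shape, not one prescribed by any Starbucks source):

```python
import csv
import json


def save_csv(items, path, fieldnames=("name", "price")):
    """Write items to a CSV file with a header row."""
    # newline="" lets the csv module control line endings itself.
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(items)


def save_json(items, path):
    """Write items to a JSON file, keeping non-ASCII characters readable."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(items, f, ensure_ascii=False, indent=2)
```

For example, `save_csv(items, "starbucks_menu.csv")` writes one row per menu item. A `None` price is written as an empty cell in CSV and as `null` in JSON.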

Ethical and Legal Considerations in Starbucks Website Data Scraping

When conducting Starbucks website data scraping, it is essential to follow ethical and legal best practices to ensure compliance and responsible data usage:

- Check Robots.txt: Always review Starbucks' robots.txt file to determine which pages are permitted for crawling and which are restricted.

- Respect Rate Limits: Be mindful of request frequency to avoid overloading Starbucks' servers and ensure minimal disruption to their operations.

- Use Data Responsibly: Any scraped data should be utilized solely for analysis, research, or legitimate business purposes while strictly adhering to Starbucks’ terms of service to prevent potential violations.
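The first two points can be sketched with the standard library alone. The robots.txt rules below are an invented sample, not Starbucks' actual file, and the `RateLimiter` class and bot name are hypothetical helpers for illustration.

```python
import time
from urllib.robotparser import RobotFileParser

# Invented sample rules; fetch and read the real robots.txt before crawling.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Allow: /menu
"""


def build_robot_parser(robots_txt):
    """Parse robots.txt text into a parser that answers can_fetch() queries."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RateLimiter:
    """Enforce a minimum interval, in seconds, between consecutive requests."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None

    def wait(self):
        """Sleep just long enough to respect the minimum interval."""
        if self._last is not None:
            remaining = self.min_interval - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


robots = build_robot_parser(SAMPLE_ROBOTS_TXT)
menu_allowed = robots.can_fetch("menu-research-bot", "https://www.example.com/menu")
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` loads the real file. Calling `limiter.wait()` before each request then keeps the crawl at a fixed, modest pace.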

If Starbucks API data aligns with your requirements, leveraging it would be a more ethical and sustainable alternative to direct website scraping.

Conclusion

Scraping the Starbucks website with lxml is a practical approach for extracting accurate menu data. Whether you're looking for real-time pricing, ingredient details, or store availability, Python and lxml can extract Starbucks data without depending on restricted APIs.

Scraping remains an essential solution for businesses that require comprehensive Starbucks store data extraction. However, any Starbucks website data scraping project must respect ethical considerations and remain legally compliant.

Are you looking to automate Starbucks pricing data scraping for your business? Our experts can help you build a reliable and scalable data extraction pipeline. Contact Mobile App Scraping today to optimize your Starbucks data collection and enhance your analytics with accurate real-time insights!